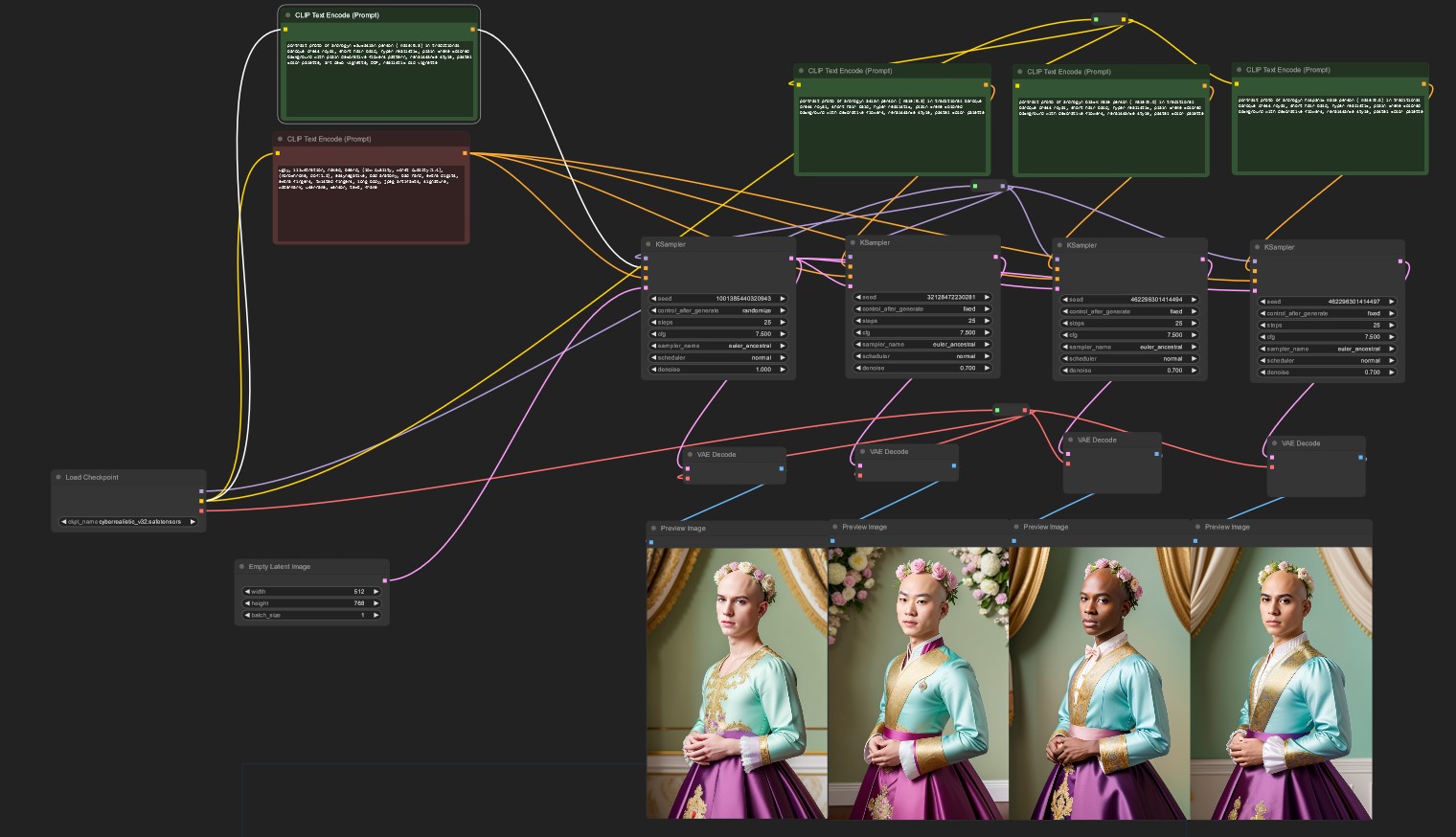

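If you have ComfyUI running, you can use the complete workflow for your own experiments: copy the JSON below into a new `.json` file and load it in ComfyUI (with the Load button, or by dragging the file onto the canvas). The workflow renders one base portrait at full denoise and then re-samples that latent three times at denoise 0.7 with variations of the prompt. It expects the checkpoint `cyberrealistic_v32.safetensors`; if you don't have it, pick another model in the Load Checkpoint node.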

```json
{
  "last_node_id": 26,
  "last_link_id": 63,
  "nodes": [
    {
      "id": 4,
      "type": "EmptyLatentImage",
      "pos": [-16, -387],
      "size": {"0": 315, "1": 106},
      "flags": {},
      "order": 0,
      "mode": 0,
      "outputs": [
        {"name": "LATENT", "type": "LATENT", "links": [3], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "EmptyLatentImage"},
      "widgets_values": [512, 768, 1]
    },
    {
      "id": 25,
      "type": "Reroute",
      "pos": [1481, -1190],
      "size": [75, 26],
      "flags": {},
      "order": 2,
      "mode": 0,
      "inputs": [
        {"name": "", "type": "*", "link": 51}
      ],
      "outputs": [
        {"name": "", "type": "MODEL", "links": [52, 53, 54, 55], "slot_index": 0}
      ],
      "properties": {"showOutputText": false, "horizontal": false}
    },
    {
      "id": 8,
      "type": "KSampler",
      "pos": [1227, -1046],
      "size": {"0": 315, "1": 262},
      "flags": {},
      "order": 12,
      "mode": 0,
      "inputs": [
        {"name": "model", "type": "MODEL", "link": 53},
        {"name": "positive", "type": "CONDITIONING", "link": 38},
        {"name": "negative", "type": "CONDITIONING", "link": 13},
        {"name": "latent_image", "type": "LATENT", "link": 12}
      ],
      "outputs": [
        {"name": "LATENT", "type": "LATENT", "links": [16], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "KSampler"},
      "widgets_values": [32128472230281, "fixed", 25, 7.5, "euler_ancestral", "normal", 0.7]
    },
    {
      "id": 14,
      "type": "KSampler",
      "pos": [1648, -1041],
      "size": {"0": 315, "1": 262},
      "flags": {},
      "order": 13,
      "mode": 0,
      "inputs": [
        {"name": "model", "type": "MODEL", "link": 54},
        {"name": "positive", "type": "CONDITIONING", "link": 39},
        {"name": "negative", "type": "CONDITIONING", "link": 23},
        {"name": "latent_image", "type": "LATENT", "link": 35}
      ],
      "outputs": [
        {"name": "LATENT", "type": "LATENT", "links": [26], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "KSampler"},
      "widgets_values": [462296301414494, "fixed", 25, 7.5, "euler_ancestral", "normal", 0.7]
    },
    {
      "id": 1,
      "type": "CheckpointLoaderSimple",
      "pos": [-389, -569],
      "size": {"0": 315, "1": 98},
      "flags": {},
      "order": 1,
      "mode": 0,
      "outputs": [
        {"name": "MODEL", "type": "MODEL", "links": [51], "shape": 3, "slot_index": 0},
        {"name": "CLIP", "type": "CLIP", "links": [1, 5, 44], "shape": 3, "slot_index": 1},
        {"name": "VAE", "type": "VAE", "links": [58], "shape": 3, "slot_index": 2}
      ],
      "properties": {"Node name for S&R": "CheckpointLoaderSimple"},
      "widgets_values": ["cyberrealistic_v32.safetensors"]
    },
    {
      "id": 10,
      "type": "VAEDecode",
      "pos": [1247, -621],
      "size": {"0": 210, "1": 46},
      "flags": {},
      "order": 16,
      "mode": 0,
      "inputs": [
        {"name": "samples", "type": "LATENT", "link": 16},
        {"name": "vae", "type": "VAE", "link": 60}
      ],
      "outputs": [
        {"name": "IMAGE", "type": "IMAGE", "links": [17], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "VAEDecode"}
    },
    {
      "id": 26,
      "type": "Reroute",
      "pos": [1526, -734],
      "size": [75, 26],
      "flags": {},
      "order": 6,
      "mode": 0,
      "inputs": [
        {"name": "", "type": "*", "link": 58}
      ],
      "outputs": [
        {"name": "", "type": "VAE", "links": [59, 60, 61, 62], "slot_index": 0}
      ],
      "properties": {"showOutputText": false, "horizontal": false}
    },
    {
      "id": 15,
      "type": "VAEDecode",
      "pos": [1670, -645],
      "size": [199.4270856873061, 94.88382538094004],
      "flags": {},
      "order": 17,
      "mode": 0,
      "inputs": [
        {"name": "samples", "type": "LATENT", "link": 26},
        {"name": "vae", "type": "VAE", "link": 61}
      ],
      "outputs": [
        {"name": "IMAGE", "type": "IMAGE", "links": [25], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "VAEDecode"}
    },
    {
      "id": 6,
      "type": "VAEDecode",
      "pos": [896, -615],
      "size": {"0": 210, "1": 46},
      "flags": {},
      "order": 11,
      "mode": 0,
      "inputs": [
        {"name": "samples", "type": "LATENT", "link": 9},
        {"name": "vae", "type": "VAE", "link": 59}
      ],
      "outputs": [
        {"name": "IMAGE", "type": "IMAGE", "links": [11], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "VAEDecode"}
    },
    {
      "id": 21,
      "type": "VAEDecode",
      "pos": [2085, -638],
      "size": {"0": 199.4270782470703, "1": 94.88382720947266},
      "flags": {},
      "order": 18,
      "mode": 0,
      "inputs": [
        {"name": "samples", "type": "LATENT", "link": 42},
        {"name": "vae", "type": "VAE", "link": 62}
      ],
      "outputs": [
        {"name": "IMAGE", "type": "IMAGE", "links": [43], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "VAEDecode"}
    },
    {
      "id": 3,
      "type": "KSampler",
      "pos": [811, -1043],
      "size": {"0": 315, "1": 262},
      "flags": {},
      "order": 7,
      "mode": 0,
      "inputs": [
        {"name": "model", "type": "MODEL", "link": 52},
        {"name": "positive", "type": "CONDITIONING", "link": 8},
        {"name": "negative", "type": "CONDITIONING", "link": 7},
        {"name": "latent_image", "type": "LATENT", "link": 3}
      ],
      "outputs": [
        {"name": "LATENT", "type": "LATENT", "links": [9, 12, 35, 56], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "KSampler"},
      "widgets_values": [1001385440320943, "randomize", 25, 7.5, "euler_ancestral", "normal", 1]
    },
    {
      "id": 5,
      "type": "CLIPTextEncode",
      "pos": [62, -1257],
      "size": {"0": 400, "1": 200},
      "flags": {},
      "order": 4,
      "mode": 0,
      "inputs": [
        {"name": "clip", "type": "CLIP", "link": 5}
      ],
      "outputs": [
        {"name": "CONDITIONING", "type": "CONDITIONING", "links": [7, 13, 23, 63], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "CLIPTextEncode"},
      "widgets_values": [
        "ugly, illustration, naked, beard, (low quality, worst quality:1.4), (monochrome, dof:1.2), easynegative, bad anatomy, bad hand, extra digits, extra fingers, twisted fingers, long body, jpeg artifacts, signature, watermark, username, censor, text, frame"
      ],
      "color": "#322",
      "bgcolor": "#533",
      "shape": 2
    },
    {
      "id": 11,
      "type": "PreviewImage",
      "pos": [1191, -468],
      "size": [369.76458740234375, 582.3216552734375],
      "flags": {},
      "order": 19,
      "mode": 0,
      "inputs": [
        {"name": "images", "type": "IMAGE", "link": 17}
      ],
      "properties": {"Node name for S&R": "PreviewImage"}
    },
    {
      "id": 16,
      "type": "PreviewImage",
      "pos": [1560, -468],
      "size": {"0": 369.76458740234375, "1": 582.3216552734375},
      "flags": {},
      "order": 20,
      "mode": 0,
      "inputs": [
        {"name": "images", "type": "IMAGE", "link": 25}
      ],
      "properties": {"Node name for S&R": "PreviewImage"}
    },
    {
      "id": 22,
      "type": "PreviewImage",
      "pos": [1929, -468],
      "size": {"0": 369.76458740234375, "1": 582.3216552734375},
      "flags": {},
      "order": 21,
      "mode": 0,
      "inputs": [
        {"name": "images", "type": "IMAGE", "link": 43}
      ],
      "properties": {"Node name for S&R": "PreviewImage"}
    },
    {
      "id": 7,
      "type": "PreviewImage",
      "pos": [822, -465],
      "size": [370.067638050426, 576.1857799183238],
      "flags": {},
      "order": 15,
      "mode": 0,
      "inputs": [
        {"name": "images", "type": "IMAGE", "link": 11}
      ],
      "properties": {"Node name for S&R": "PreviewImage"}
    },
    {
      "id": 20,
      "type": "KSampler",
      "pos": [2050, -1037],
      "size": {"0": 315, "1": 262},
      "flags": {},
      "order": 14,
      "mode": 0,
      "inputs": [
        {"name": "model", "type": "MODEL", "link": 55},
        {"name": "positive", "type": "CONDITIONING", "link": 40},
        {"name": "negative", "type": "CONDITIONING", "link": 63},
        {"name": "latent_image", "type": "LATENT", "link": 56}
      ],
      "outputs": [
        {"name": "LATENT", "type": "LATENT", "links": [42], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "KSampler"},
      "widgets_values": [462296301414497, "fixed", 25, 7.5, "euler_ancestral", "normal", 0.7]
    },
    {
      "id": 9,
      "type": "CLIPTextEncode",
      "pos": [1122, -1396],
      "size": {"0": 400, "1": 200},
      "flags": {},
      "order": 8,
      "mode": 0,
      "inputs": [
        {"name": "clip", "type": "CLIP", "link": 46}
      ],
      "outputs": [
        {"name": "CONDITIONING", "type": "CONDITIONING", "links": [38], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "CLIPTextEncode"},
      "widgets_values": [
        "portrait photo of androgyn asian person ( male:0.3) in traditional baroque dress royal, short hair bald, hyper realistic, plain creme colored background with decorative flowers, renaissance style, pastel color palette"
      ],
      "color": "#232",
      "bgcolor": "#353"
    },
    {
      "id": 12,
      "type": "CLIPTextEncode",
      "pos": [1567, -1394],
      "size": {"0": 400, "1": 200},
      "flags": {},
      "order": 9,
      "mode": 0,
      "inputs": [
        {"name": "clip", "type": "CLIP", "link": 47}
      ],
      "outputs": [
        {"name": "CONDITIONING", "type": "CONDITIONING", "links": [39], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "CLIPTextEncode"},
      "widgets_values": [
        "portrait photo of androgyn black male person ( male:0.6) in traditional baroque dress royal, short hair bald, hyper realistic, plain creme colored background with decorative flowers, renaissance style, pastel color palette"
      ],
      "color": "#232",
      "bgcolor": "#353"
    },
    {
      "id": 19,
      "type": "CLIPTextEncode",
      "pos": [2013, -1398],
      "size": {"0": 400, "1": 200},
      "flags": {},
      "order": 10,
      "mode": 0,
      "inputs": [
        {"name": "clip", "type": "CLIP", "link": 49}
      ],
      "outputs": [
        {"name": "CONDITIONING", "type": "CONDITIONING", "links": [40], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "CLIPTextEncode"},
      "widgets_values": [
        "portrait photo of androgyn hispanic male person ( male:0.6) in traditional baroque dress royal, short hair bald, hyper realistic, plain creme colored background with decorative flowers, renaissance style, pastel color palette"
      ],
      "color": "#232",
      "bgcolor": "#353"
    },
    {
      "id": 23,
      "type": "Reroute",
      "pos": [1727, -1529],
      "size": [75, 26],
      "flags": {},
      "order": 5,
      "mode": 0,
      "inputs": [
        {"name": "", "type": "*", "link": 44}
      ],
      "outputs": [
        {"name": "", "type": "CLIP", "links": [46, 47, 49], "slot_index": 0}
      ],
      "properties": {"showOutputText": false, "horizontal": false}
    },
    {
      "id": 2,
      "type": "CLIPTextEncode",
      "pos": [78, -1509],
      "size": {"0": 400, "1": 200},
      "flags": {},
      "order": 3,
      "mode": 0,
      "inputs": [
        {"name": "clip", "type": "CLIP", "link": 1}
      ],
      "outputs": [
        {"name": "CONDITIONING", "type": "CONDITIONING", "links": [8], "shape": 3, "slot_index": 0}
      ],
      "properties": {"Node name for S&R": "CLIPTextEncode"},
      "widgets_values": [
        "portrait photo of androgyn caucasian person ( male:0.3) in traditional baroque dress royal, short hair bald, hyper realistic, plain creme colored background with plain decorative flowers pattern, renaissance style, pastel color palette, art deco vignette, DOF, realistic old vignette"
      ],
      "color": "#232",
      "bgcolor": "#353"
    }
  ],
  "links": [
    [1, 1, 1, 2, 0, "CLIP"],
    [3, 4, 0, 3, 3, "LATENT"],
    [5, 1, 1, 5, 0, "CLIP"],
    [7, 5, 0, 3, 2, "CONDITIONING"],
    [8, 2, 0, 3, 1, "CONDITIONING"],
    [9, 3, 0, 6, 0, "LATENT"],
    [11, 6, 0, 7, 0, "IMAGE"],
    [12, 3, 0, 8, 3, "LATENT"],
    [13, 5, 0, 8, 2, "CONDITIONING"],
    [16, 8, 0, 10, 0, "LATENT"],
    [17, 10, 0, 11, 0, "IMAGE"],
    [23, 5, 0, 14, 2, "CONDITIONING"],
    [25, 15, 0, 16, 0, "IMAGE"],
    [26, 14, 0, 15, 0, "LATENT"],
    [35, 3, 0, 14, 3, "LATENT"],
    [38, 9, 0, 8, 1, "CONDITIONING"],
    [39, 12, 0, 14, 1, "CONDITIONING"],
    [40, 19, 0, 20, 1, "CONDITIONING"],
    [42, 20, 0, 21, 0, "LATENT"],
    [43, 21, 0, 22, 0, "IMAGE"],
    [44, 1, 1, 23, 0, "*"],
    [46, 23, 0, 9, 0, "CLIP"],
    [47, 23, 0, 12, 0, "CLIP"],
    [49, 23, 0, 19, 0, "CLIP"],
    [51, 1, 0, 25, 0, "*"],
    [52, 25, 0, 3, 0, "MODEL"],
    [53, 25, 0, 8, 0, "MODEL"],
    [54, 25, 0, 14, 0, "MODEL"],
    [55, 25, 0, 20, 0, "MODEL"],
    [56, 3, 0, 20, 3, "LATENT"],
    [58, 1, 2, 26, 0, "*"],
    [59, 26, 0, 6, 1, "VAE"],
    [60, 26, 0, 10, 1, "VAE"],
    [61, 26, 0, 15, 1, "VAE"],
    [62, 26, 0, 21, 1, "VAE"],
    [63, 5, 0, 20, 2, "CONDITIONING"]
  ],
  "groups": [],
  "config": {},
  "extra": {},
  "version": 0.4
}
```
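If the file does not load after copying, it can help to check it outside ComfyUI first. The following is a minimal sketch using only the Python standard library; the function names are hypothetical, not part of ComfyUI. It assumes each entry in `links` has the layout `[link_id, origin_id, origin_slot, target_id, target_slot, type]` and that KSampler widgets are stored in the order seen in this export (seed, seed control, steps, cfg, sampler, scheduler, denoise). It parses the file, cross-checks every link against the sockets of the nodes it connects, and prints the settings of each KSampler.

```python
import json
import sys

# Widget order of the KSampler nodes in this export (assumed from the JSON above):
# seed, control_after_generate, steps, cfg, sampler_name, scheduler, denoise.
KSAMPLER_FIELDS = ("seed", "control", "steps", "cfg", "sampler", "scheduler", "denoise")


def check_links(workflow):
    """List disagreements between the "links" table and the nodes' sockets.

    Each link entry is read as [link_id, origin_id, origin_slot,
    target_id, target_slot, type].
    """
    nodes = {node["id"]: node for node in workflow["nodes"]}
    problems = []
    for link_id, src_id, src_slot, dst_id, dst_slot, _type in workflow["links"]:
        src, dst = nodes.get(src_id), nodes.get(dst_id)
        if src is None or dst is None:
            problems.append(f"link {link_id}: unknown node {src_id} or {dst_id}")
            continue
        outputs = src.get("outputs", [])
        inputs = dst.get("inputs", [])
        # The origin output must list this link id among its "links".
        if src_slot >= len(outputs) or link_id not in (outputs[src_slot].get("links") or []):
            problems.append(f"link {link_id}: not listed on output {src_slot} of node {src_id}")
        # The target input must point back at this link id.
        if dst_slot >= len(inputs) or inputs[dst_slot].get("link") != link_id:
            problems.append(f"link {link_id}: not attached to input {dst_slot} of node {dst_id}")
    return problems


def ksampler_settings(workflow):
    """Map each KSampler node id to a dict of its named widget values."""
    return {
        node["id"]: dict(zip(KSAMPLER_FIELDS, node.get("widgets_values", [])))
        for node in workflow["nodes"]
        if node["type"] == "KSampler"
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # json.load raises json.JSONDecodeError (with line and column) on a broken paste.
    with open(sys.argv[1], encoding="utf-8") as f:
        wf = json.load(f)
    for problem in check_links(wf):
        print("WARNING:", problem)
    for node_id, settings in sorted(ksampler_settings(wf).items()):
        print(f"KSampler {node_id}: {settings}")
```

Saved as, for example, `check_workflow.py` (a hypothetical name) and run as `python check_workflow.py workflow.json`, it should print no warnings for the workflow above and list four KSampler nodes: node 3 with a randomized seed and denoise 1, and nodes 8, 14 and 20 with fixed seeds and denoise 0.7.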