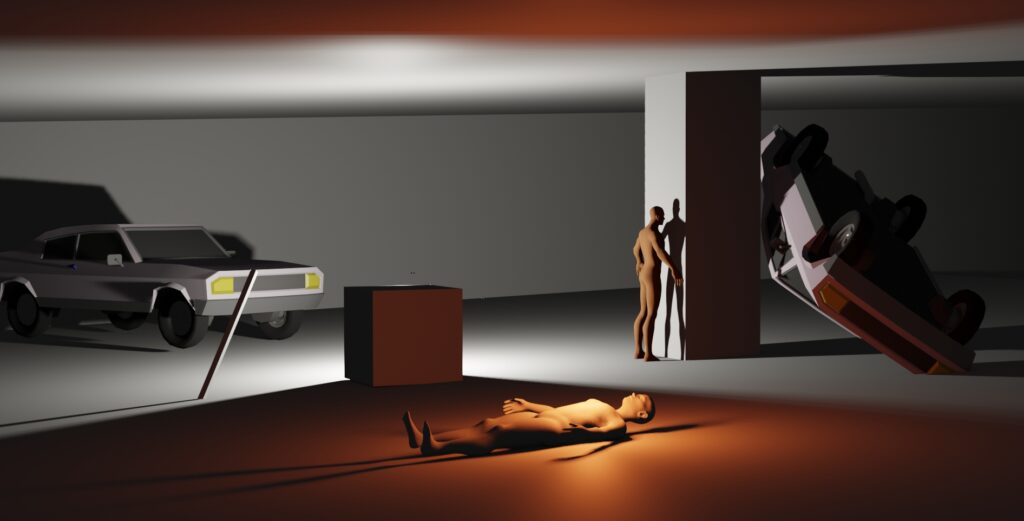

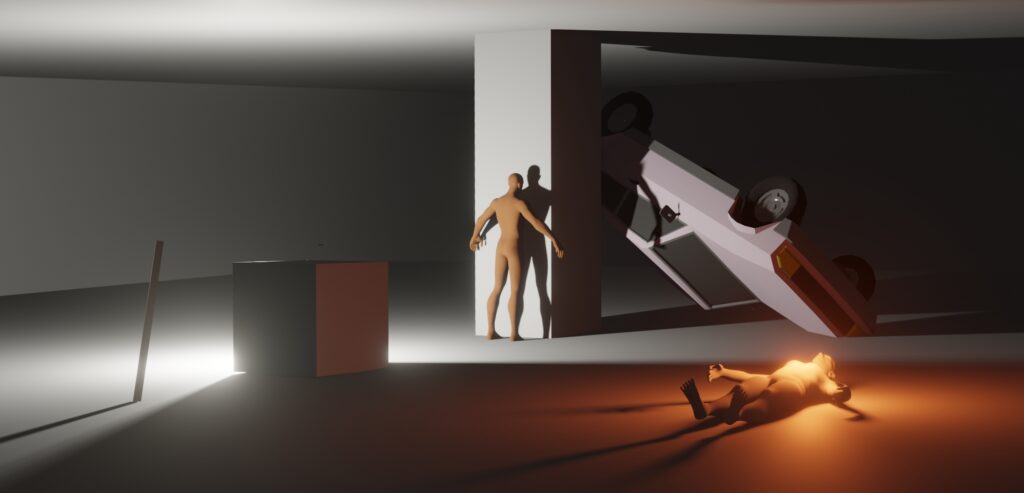

Creative work with generative diffusion models can be challenging when it comes to precise object positioning, camera perspective, body pose or a specific lighting setup. As most workflows are mainly text based, or combined with advanced ControlNet-based guidance, our control over the output is limited. To regain that creative control, we can combine basic 3D geometry and lighting with a suitable prompt to guide the diffusion model in the right direction.

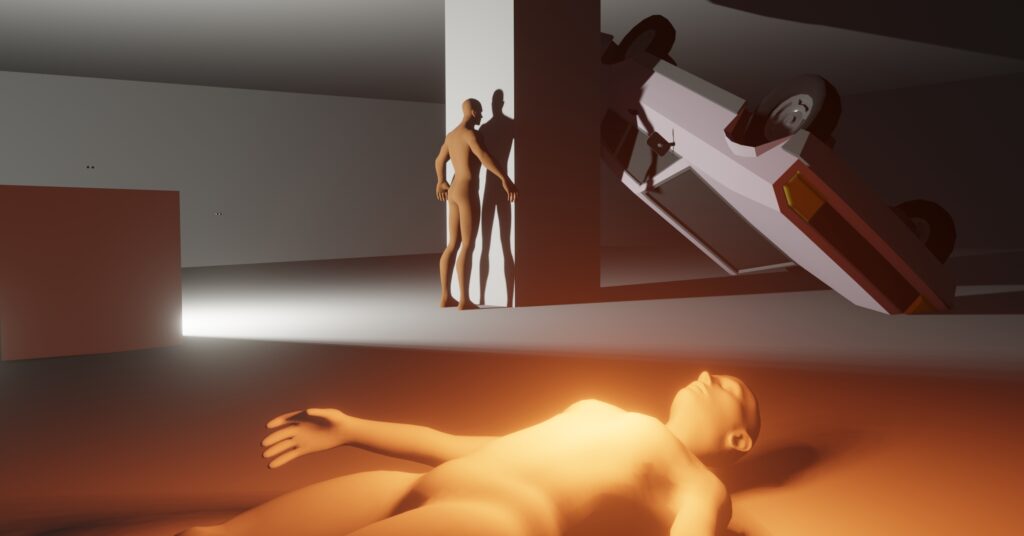

realistic analog photo of

an underground empty parking house in low light. white directional rim light and a red spot light.

a bald person in linen pants and white t-shirt is standing facing a concrete block. the person has a full headed knitted mask made from black and white yarn covering the entire head. head points upwards.

a second bald person is lying flat on the floor in the foreground. looking up to the ceiling.

there are two all white crashed car wrecks with white smoke coming out of the windows. one wreck is in the far left background. the second wreck is turned upside down on its roof placed on the far right side.

90s oversized used clothing.

the concrete floor is dirty, wet and weathered and with visible cracks.

there is a crooked metal pole sticking out of the floor to the left.

film grain. DOF. vignette.

DOWNLOAD WORKFLOW: Z-IMAGE_basic_i2i

scene rendering experiments

Creating a dummy 3D scene with proper lighting makes it possible to change camera angles, lighting and object positions almost instantly. The diffusion model then acts as a kind of rendering and "interpretation" engine that turns the draft into a detailed, plausible output.
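In an image-to-image (i2i) setup the diffusion model does not start from pure noise: the encoded draft is partially noised and then denoised under the prompt, which is why the draft's layout survives. A minimal sketch of that starting point follows. It assumes a rectified-flow (flow-matching) noise convention, and the helper `noise_draft` plus the flat list standing in for a latent tensor are hypothetical simplifications, not the internals of the Z-Image workflow.

```python
import random
from typing import List


def noise_draft(latent: List[float], t: float, seed: int = 0) -> List[float]:
    """Return the draft latent blended with Gaussian noise at level t.

    Assumption: a rectified-flow / flow-matching convention,
    x_t = (1 - t) * x_0 + t * eps, where x_0 is the encoded draft.
    t = 0 returns the draft unchanged; t = 1 discards it entirely,
    which amounts to plain text-to-image generation.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    rng = random.Random(seed)  # seeded so the same draft + seed is reproducible
    return [(1.0 - t) * x + t * rng.gauss(0.0, 1.0) for x in latent]


draft = [0.2, -0.5, 0.9]  # hypothetical stand-in for an encoded draft image
print(noise_draft(draft, 0.3))  # mostly draft, with some noise mixed in
```

The lower the noise level, the more of the draft's composition and lighting the model is forced to keep; the higher it is, the more the model "re-renders" from the prompt alone.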

Since we can change the perspective of our 3D draft camera, we can "wander" through the scene at the draft stage. This gives us the freedom to find a precise, meaningful perspective instead of cherry-picking random diffusion outputs.
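Because the draft is an actual 3D scene, moving the camera is plain geometry rather than prompt guesswork. A minimal sketch, assuming a Y-up coordinate system, of orbiting a draft camera around a point of interest and building its look-at basis; `orbit_camera` and `look_at` are hypothetical helpers, not functions from any particular 3D package.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def orbit_camera(target: Vec3, radius: float, yaw_deg: float, pitch_deg: float) -> Vec3:
    """Camera position on a sphere around `target` (Y-up; yaw 0 sits on +Z)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (target[0] + radius * math.cos(pitch) * math.sin(yaw),
            target[1] + radius * math.sin(pitch),
            target[2] + radius * math.cos(pitch) * math.cos(yaw))


def look_at(eye: Vec3, target: Vec3,
            world_up: Vec3 = (0.0, 1.0, 0.0)) -> Tuple[Vec3, Vec3, Vec3]:
    """Orthonormal (right, up, forward) basis for a camera at `eye` aimed at `target`.

    Degenerate when the camera looks straight up or down
    (forward parallel to world_up).
    """
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, world_up))
    up = cross(right, forward)
    return right, up, forward


# "Wander" around a subject: eight viewpoints 45 degrees apart, slightly above it.
subject = (0.0, 1.0, 0.0)
views = []
for yaw in range(0, 360, 45):
    eye = orbit_camera(subject, radius=6.0, yaw_deg=yaw, pitch_deg=15.0)
    views.append((eye, look_at(eye, subject)))
```

Each `(eye, basis)` pair describes one candidate draft viewpoint; rendering a handful of them and picking the strongest composition before any diffusion pass is the sketch's equivalent of "wandering" through the scene.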

Even though the draft only delivers fairly raw information, the diffusion model is capable of interpreting plausible light and lens behaviour.
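To make "raw information" concrete: a very basic draft renderer might evaluate little more than direct light, as in the generic sketch below of a single spot light (like the red spot light in the example prompt) shaded with a Lambert cosine term, a linear cone falloff and inverse-square attenuation. This is an assumed, simplified shading model, not the one used by any specific 3D tool, and all names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def spot_light(point: Vec3, normal: Vec3, light_pos: Vec3, light_dir: Vec3,
               color: Vec3, inner_deg: float, outer_deg: float,
               power: float = 1.0) -> Vec3:
    """Direct RGB light from one spot light at a surface point.

    Lambert cosine term * linear cone falloff * inverse-square attenuation.
    No shadows, bounce light, materials or lens effects: those are left
    for the diffusion model to "interpret".
    """
    to_light = (light_pos[0] - point[0], light_pos[1] - point[1], light_pos[2] - point[2])
    dist_sq = dot(to_light, to_light)
    l = normalize(to_light)
    lambert = max(0.0, dot(normalize(normal), l))
    # Cosine of the angle between the spot axis and the ray from light to point.
    cos_theta = dot(normalize(light_dir), (-l[0], -l[1], -l[2]))
    cos_inner = math.cos(math.radians(inner_deg))
    cos_outer = math.cos(math.radians(outer_deg))
    if cos_theta <= cos_outer:
        cone = 0.0  # outside the cone
    elif cos_theta >= cos_inner:
        cone = 1.0  # fully inside the hot spot
    else:
        cone = (cos_theta - cos_outer) / (cos_inner - cos_outer)  # soft edge
    intensity = power * lambert * cone / dist_sq
    return (color[0] * intensity, color[1] * intensity, color[2] * intensity)


# A red spot light 2 units above a floor point, aimed straight down.
red = spot_light(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0),
                 light_pos=(0.0, 2.0, 0.0), light_dir=(0.0, -1.0, 0.0),
                 color=(1.0, 0.0, 0.0), inner_deg=20.0, outer_deg=35.0, power=4.0)
```

A draft lit like this carries the direction, colour and rough falloff of the light, which is the information the prompt alone struggles to pin down; the model supplies the rest.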

drawbacks

The quality of the renderings depends heavily on the quality of the draft, and there is always a compromise to make between quality and control.
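One place where this compromise becomes a concrete setting is the denoising strength of the image-to-image pass. The sketch below follows the arithmetic Hugging Face diffusers' Stable Diffusion img2img pipeline uses to turn `strength` into a starting point in the sampling schedule; the helper name `img2img_steps` is hypothetical. In ComfyUI, the KSampler's `denoise` value plays a comparable role, although its internal step arithmetic is not identical.

```python
from typing import Tuple


def img2img_steps(num_inference_steps: int, strength: float) -> Tuple[int, int]:
    """Return (start_index, steps_run) for an img2img pass.

    Low strength: few denoising steps, so the draft's layout (and its
    crude surfaces) survive. High strength: more steps, more detail and
    realism from the model, but less control from the draft.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    init_steps = min(int(num_inference_steps * strength), num_inference_steps)
    start_index = max(num_inference_steps - init_steps, 0)
    return start_index, num_inference_steps - start_index


for s in (0.25, 0.5, 0.75, 1.0):
    start, run = img2img_steps(20, s)
    print(f"strength={s:.2f}: skip {start} steps, denoise for {run}")
```

With 20 steps, a strength of 0.5 skips the first 10 steps and denoises for the remaining 10, so the model only refines what the draft already establishes; at 1.0 nothing is skipped and the draft's influence is weakest.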

The model is not flawless, so we still have to deal with hallucinations and misinterpretations of the draft.