motion feedback buffer & reaction diffusion

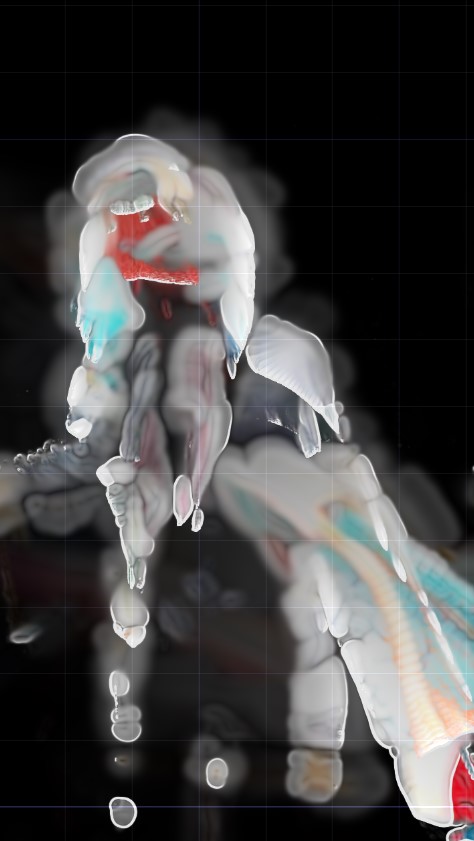

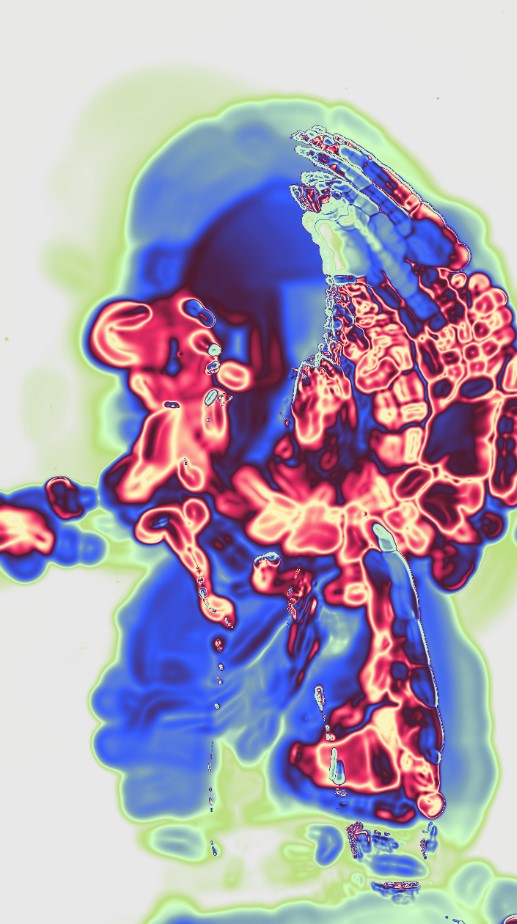

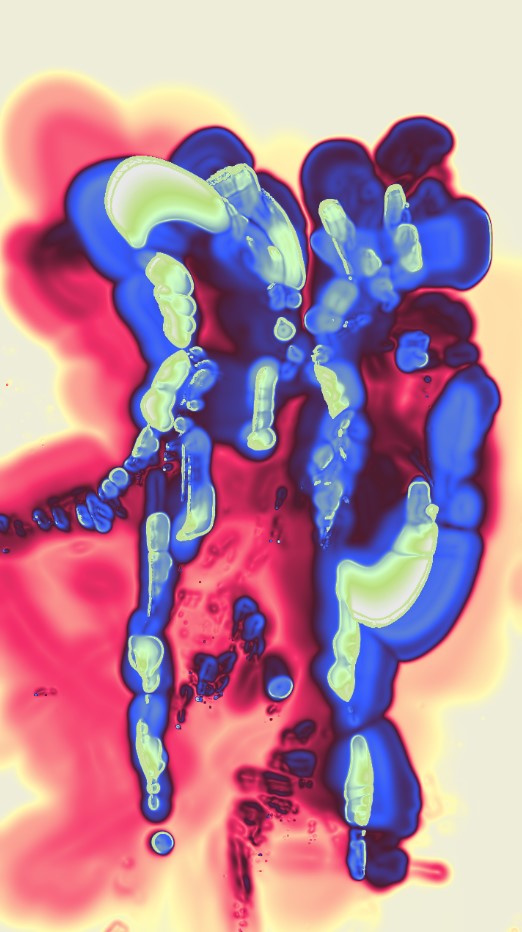

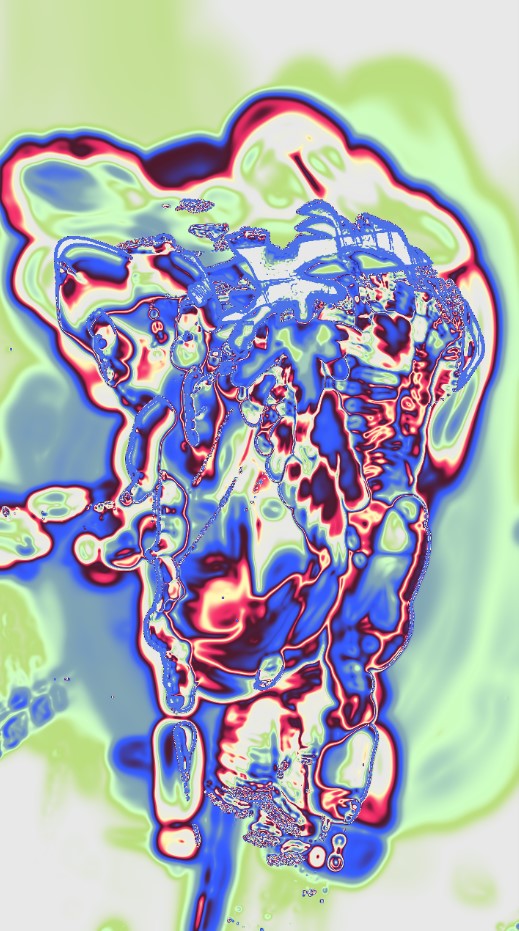

Motion feedback generated from simple video camera input can be combined with typical digital visual simulations, such as shader-based reaction diffusion. In this sketch, the motion data is mixed with the reaction feedback buffer, then recorded and piped into Stable Diffusion API/img2img. With a fairly simple prompt setup, a wide range of materials and interpretations of the video footage can be achieved.
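
To make the mixing step more concrete, here is a minimal CPU sketch in NumPy. It is not the shader used for the images above: it assumes a Gray-Scott reaction diffusion model, plain frame differencing as the motion signal, and a synthetic moving square instead of a camera. All function names and parameter values (`feed`, `kill`, `du`, `dv`, `mix`, `threshold`) are illustrative assumptions.

```python
import numpy as np


def laplacian(field):
    """Five-point Laplacian with wrap-around borders."""
    return (
        np.roll(field, 1, axis=0) + np.roll(field, -1, axis=0)
        + np.roll(field, 1, axis=1) + np.roll(field, -1, axis=1)
        - 4.0 * field
    )


def motion_mask(prev_frame, frame, threshold=0.1):
    """Absolute frame difference, thresholded into a 0/1 float mask."""
    return (np.abs(frame - prev_frame) > threshold).astype(np.float64)


def step(u, v, motion, feed=0.055, kill=0.062, du=0.16, dv=0.08, mix=0.5):
    """One Gray-Scott update; the motion mask seeds chemical V first."""
    v = np.clip(v + mix * motion, 0.0, 1.0)  # mix motion into the buffer
    uvv = u * v * v
    u_next = u + du * laplacian(u) - uvv + feed * (1.0 - u)
    v_next = v + dv * laplacian(v) + uvv - (feed + kill) * v
    return np.clip(u_next, 0.0, 1.0), np.clip(v_next, 0.0, 1.0)


def synthetic_frame(size, t):
    """Stand-in for a camera frame: a bright square moving to the right."""
    frame = np.zeros((size, size))
    mid = size // 2
    x = (2 * t) % (size - 8)
    frame[mid - 4:mid + 4, x:x + 8] = 1.0
    return frame


def run_demo(size=64, steps=50):
    """Feed the moving square into the reaction buffer for a few steps."""
    u = np.ones((size, size))
    v = np.zeros((size, size))
    prev = synthetic_frame(size, 0)
    for t in range(1, steps + 1):
        frame = synthetic_frame(size, t)
        u, v = step(u, v, motion_mask(prev, frame))
        prev = frame
    return u, v


u, v = run_demo()
```

In this sketch the `v` array plays the role of the feedback buffer; it is the image that would be recorded and handed on to img2img. With real footage, `synthetic_frame` would be replaced by grayscale camera frames scaled to the range 0 to 1.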

On top of that, each frame of this setup can serve as the source for a depth map calculation, so it is potentially possible to generate 3D shapes from this plain 2D setup. As already seen in current photogrammetry setups, machine learning algorithms might help to generate proper, consistent depth information and, from it, relevant time-based geometry.
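
As a rough illustration of how a depth map could become geometry, the sketch below back-projects each pixel into a 3D point. It assumes a simple pinhole camera with the principal point at the image center and a made-up `focal` value, and it treats the depth values as actual distances. Depth maps estimated by machine learning models are often only relative, so the resulting shape would need rescaling. The function name `depth_to_points` is hypothetical.

```python
import numpy as np


def depth_to_points(depth, focal=1.0):
    """Back-project an (H, W) depth map into an (H*W, 3) array of points."""
    h, w = depth.shape
    ys, xs = np.mgrid[0:h, 0:w]
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0  # assumed principal point
    x = (xs - cx) / focal * depth
    y = (ys - cy) / focal * depth
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)


# A flat 3x3 depth map at distance 1 becomes a flat 3x3 grid of points.
points = depth_to_points(np.ones((3, 3)))
```

Running this once per frame gives one point set per time step, which is one possible reading of time-based geometry here.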

motion feedback buffer effects & reaction diffusion & ControlNet Depth

Combining simple motion tracking with shader-based feedback loops yields quite a lot of interesting visual effects. In combination with the Stable Diffusion img2img/ControlNet/Depth pipeline, this is quite an exciting way to create shapes, figurines and patterns. Notice the interesting reaction of the AI when fed with different feedback variations. Also note that all sketches run with a common prompt: dynamic sculpture of dancer, realistic
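
For reference, this is roughly how a single recorded frame and the common prompt could be packed into an img2img request. The sketch assumes an API shaped like the AUTOMATIC1111 web UI endpoint `/sdapi/v1/img2img`, which accepts base64-encoded `init_images`; which API was actually used for these sketches is not stated here. The helper name and the `denoising_strength` and `steps` values are placeholders, and the ControlNet depth settings are left out because their request format depends on the installed extension.

```python
import base64
import json

COMMON_PROMPT = "dynamic sculpture of dancer, realistic"


def build_img2img_payload(frame_png, prompt=COMMON_PROMPT,
                          denoising_strength=0.6, steps=20):
    """Build a JSON-serializable img2img request body from PNG bytes."""
    encoded = base64.b64encode(frame_png).decode("ascii")
    return {
        "init_images": [encoded],  # the recorded feedback frame
        "prompt": prompt,
        "denoising_strength": denoising_strength,  # assumed value
        "steps": steps,  # assumed value
    }


# Placeholder bytes stand in for a real PNG frame from the recording.
payload = build_img2img_payload(b"placeholder, not a real PNG")
body = json.dumps(payload)
```

Keeping the prompt fixed and varying only the input frame is what makes the different feedback variations comparable.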