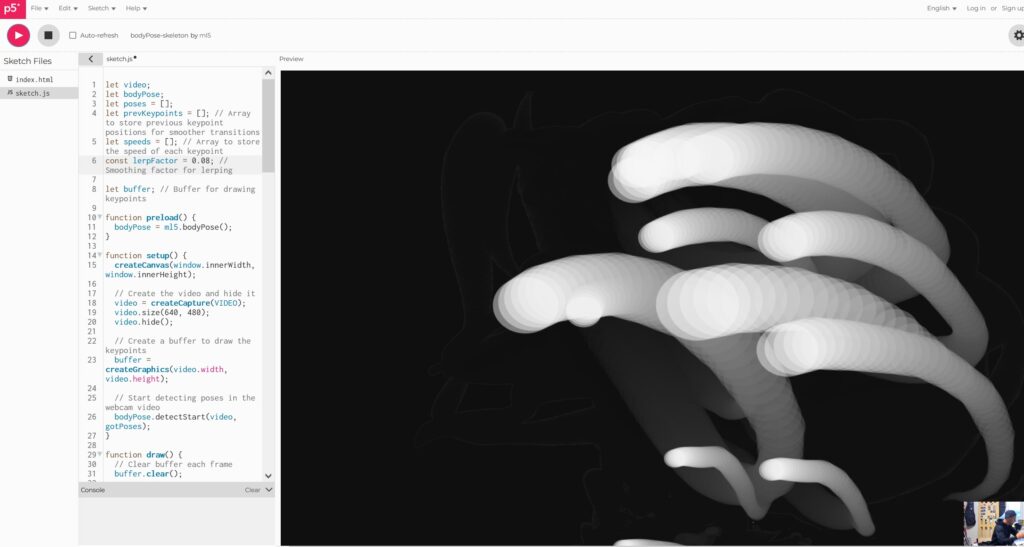

Designing with body motion seems a rather abstract idea at first sight. With simple web-based body tracking tools built on ml5.js, a plain webcam is enough to get two-dimensional access to limb positions. By drawing these positions to a canvas with a deliberate lag and smoothing, we can generate depth maps that can be used with current ControlNet image diffusion models. Body motion, speed and movement characteristics are translated into time-coded maps that can serve as the basis for any kind of design.
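The lagging, smoothed drawing can be read as exponential smoothing: each frame, the drawn point moves a fixed fraction of the remaining distance toward the tracked position. The following is a minimal sketch in plain JavaScript, independent of p5.js. The helper names `smoothToward` and `framesToCover` are hypothetical; the factor 0.08 is the `lerpFactor` used in the sketch further down.

```javascript
// Hypothetical helpers for illustration; not part of p5.js or ml5.js.
const LERP_FACTOR = 0.08; // same value as lerpFactor in sketch.js

// Linear interpolation, same form as p5's lerp(start, stop, amt).
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Move the drawn point a fraction of the way toward the tracked position.
function smoothToward(prev, target, factor = LERP_FACTOR) {
  return {
    x: lerp(prev.x, target.x, factor),
    y: lerp(prev.y, target.y, factor),
  };
}

// Count the frames needed until the drawn point has covered `fraction`
// of the distance to a target that stays still.
function framesToCover(fraction, factor = LERP_FACTOR) {
  let remaining = 1; // share of the original distance still to go
  let frames = 0;
  while (remaining > 1 - fraction) {
    remaining *= 1 - factor;
    frames++;
  }
  return frames;
}
```

With the factor of 0.08, `framesToCover(0.9)` returns 28, so a dot needs 28 frames to close 90 percent of the gap to a limb that has stopped moving. At 60 frames per second (an assumption; the real rate depends on the browser and camera) that is a little under half a second, which is what gives the drawing its soft, trailing character.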

realistic photo of medical device, bio inspired with white and black translucent dirty and used material , fungii lamellae, (white plain void background:1.2), depth of field

<!DOCTYPE html>
<html>
  <head>
    <meta charset="UTF-8" />
    <title>ml5.js bodyPose Skeleton Example</title>
    <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.9.4/p5.min.js"></script>
    <script src="https://unpkg.com/ml5@1/dist/ml5.min.js"></script>
  </head>
  <body>
    <script src="sketch.js"></script>
  </body>
</html>

let video;
let bodyPose;
let poses = [];
let prevKeypoints = []; // Smoothed keypoint positions from the previous frame, per pose
let speeds = []; // Smoothed speed of each keypoint, per pose
const lerpFactor = 0.08; // Smoothing factor for lerping
let buffer; // Off-screen buffer for drawing keypoints

function preload() {
  // Load the bodyPose model before setup() runs
  bodyPose = ml5.bodyPose();
}

function setup() {
  createCanvas(window.innerWidth, window.innerHeight);

  // Create the video and hide it
  video = createCapture(VIDEO);
  video.size(640, 480);
  video.hide();

  // Create a buffer to draw the keypoints
  buffer = createGraphics(video.width, video.height);

  // Start detecting poses in the webcam video
  bodyPose.detectStart(video, gotPoses);
}

function draw() {
  // Clear buffer each frame
  buffer.clear();

  // Calculate scaling factors to match video dimensions to canvas dimensions
  let xScale = width / video.width;
  let yScale = height / video.height;

  for (let i = 0; i < poses.length; i++) {
    let pose = poses[i];

    for (let j = 0; j < pose.keypoints.length; j++) {
      let keypoint = pose.keypoints[j];

      // Check if the keypoint is confidently tracked
      if (keypoint.confidence > 0.1) {
        // Initialize previous keypoints and speeds if empty
        if (!prevKeypoints[i]) {
          prevKeypoints[i] = [];
          speeds[i] = [];
        }

        // Mirror the x-axis by adjusting the x-coordinate, scaling for canvas size
        let mirroredX = video.width - keypoint.x;
        let scaledX = mirroredX * xScale;
        let scaledY = keypoint.y * yScale;

        // If the keypoint was untracked, snap to the new position
        if (!prevKeypoints[i][j]) {
          prevKeypoints[i][j] = { x: scaledX, y: scaledY };
          speeds[i][j] = 0; // Reset speed to 0
        }

        // Get previous position
        let prev = prevKeypoints[i][j];

        // Calculate motion speed (distance between current and previous positions)
        let dx = scaledX - prev.x;
        let dy = scaledY - prev.y;
        let speed = sqrt(dx * dx + dy * dy);

        // Smooth the speed value
        speeds[i][j] = lerp(speeds[i][j], speed * 0.33, lerpFactor);

        // Scale the circle size based on speed (2 at rest, 20 at speed 10 and above);
        // the final `true` clamps the result to that range
        let size = map(speeds[i][j], 0, 10, 2, 20, true);

        // Draw the circle for this keypoint on the buffer
        buffer.fill(255, 120);
        buffer.noStroke();
        buffer.circle(prev.x / xScale, prev.y / yScale, size / xScale); // Adjusted for buffer coordinates

        // Update previous keypoint position for the next frame with lerping
        prevKeypoints[i][j] = {
          x: lerp(prev.x, scaledX, lerpFactor),
          y: lerp(prev.y, scaledY, lerpFactor)
        };
      } else {
        // Reset the previous keypoint and speed if it is no longer tracked
        if (prevKeypoints[i] && prevKeypoints[i][j]) {
          prevKeypoints[i][j] = null;
          speeds[i][j] = 0; // Reset speed to 0
        }
      }
    }
  }

  // Overlay a translucent black layer on the buffer. The main canvas is never
  // cleared, so earlier frames darken gradually and leave a fading trail.
  buffer.fill(0, 8);
  buffer.noStroke();
  buffer.rect(0, 0, buffer.width, buffer.height);

  // Draw the buffer to the main canvas, scaled up to match canvas size
  image(buffer, 0, 0, width, height);

  // Draw the video feed in the lower right corner
  image(video, width - 160, height - 120, 160, 120);
}

// Callback function for when bodyPose outputs data
function gotPoses(results) {
  poses = results;
}
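To make the speed-to-size step easier to reason about outside the browser, here is a minimal sketch of the same arithmetic in plain JavaScript. The helper names `mapRange`, `updateSpeed` and `dotSize` are hypothetical; the constants (0.33, 0.08, and the ranges 0 to 10 and 2 to 20) are taken from the sketch. It also shows why clamping matters: p5's `map()` extrapolates beyond the target range unless its optional `withinBounds` argument is `true`.

```javascript
// Hypothetical helpers for illustration; plain JavaScript, no p5.js required.

// Linear interpolation, same form as p5's lerp(start, stop, amt).
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Re-map a value from one range to another, like p5's map().
// With clamp = false the result extrapolates beyond the output range.
function mapRange(value, inMin, inMax, outMin, outMax, clamp = false) {
  const mapped =
    outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
  if (!clamp) return mapped;
  const lo = Math.min(outMin, outMax);
  const hi = Math.max(outMin, outMax);
  return Math.min(Math.max(mapped, lo), hi);
}

// One smoothing step: the raw per-frame distance is damped by 0.33
// and then eased toward with the lerp factor.
function updateSpeed(prevSpeed, dx, dy, factor = 0.08) {
  const rawSpeed = Math.hypot(dx, dy);
  return lerp(prevSpeed, rawSpeed * 0.33, factor);
}

// Dot diameter for a smoothed speed: 2 at rest, 20 at speed 10.
function dotSize(smoothedSpeed, clamp = true) {
  return mapRange(smoothedSpeed, 0, 10, 2, 20, clamp);
}
```

For a smoothed speed of 20, `dotSize(20, false)` gives 38 while `dotSize(20)` stays at 20, so without clamping a fast gesture can draw dots well above the intended maximum.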