
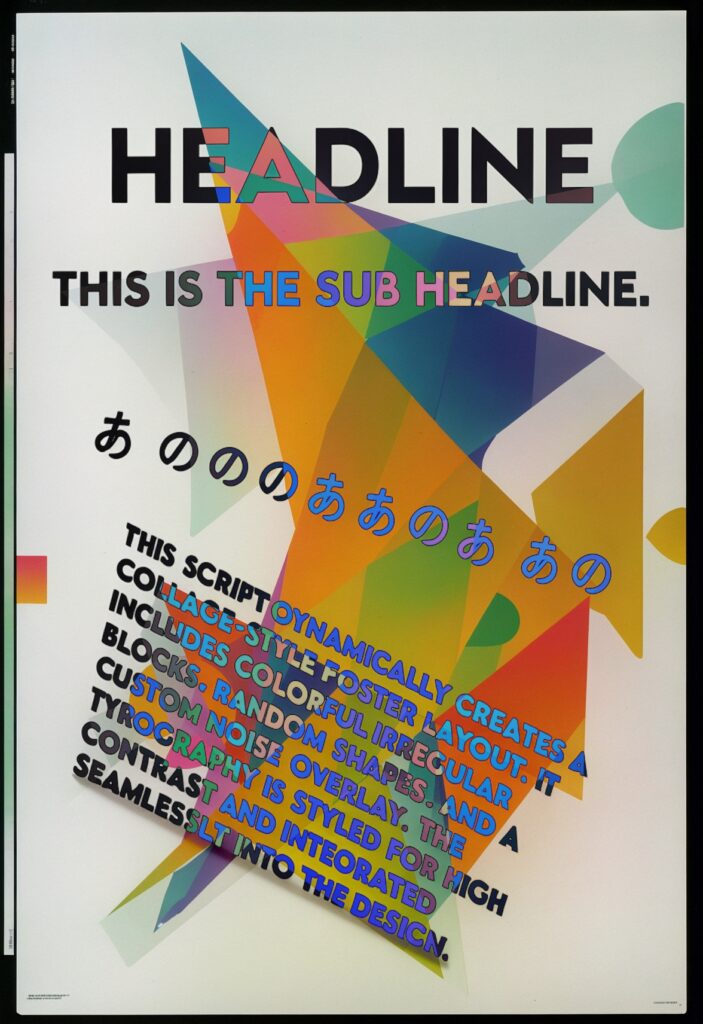

Working with layouts and typography-based designs is quite challenging with the tools currently available. Typography follows very strict visual rules for the letterforms themselves, for visual consistency and for spacing, and we struggle to meet them with the diffusion methods of current models like Stable Diffusion or FLUX. Additional control tools let us guide the diffusion process to some extent, but mostly at the cost of image quality.

Method I: Splitting the image layer from the text layer

By splitting the

The typographic layer

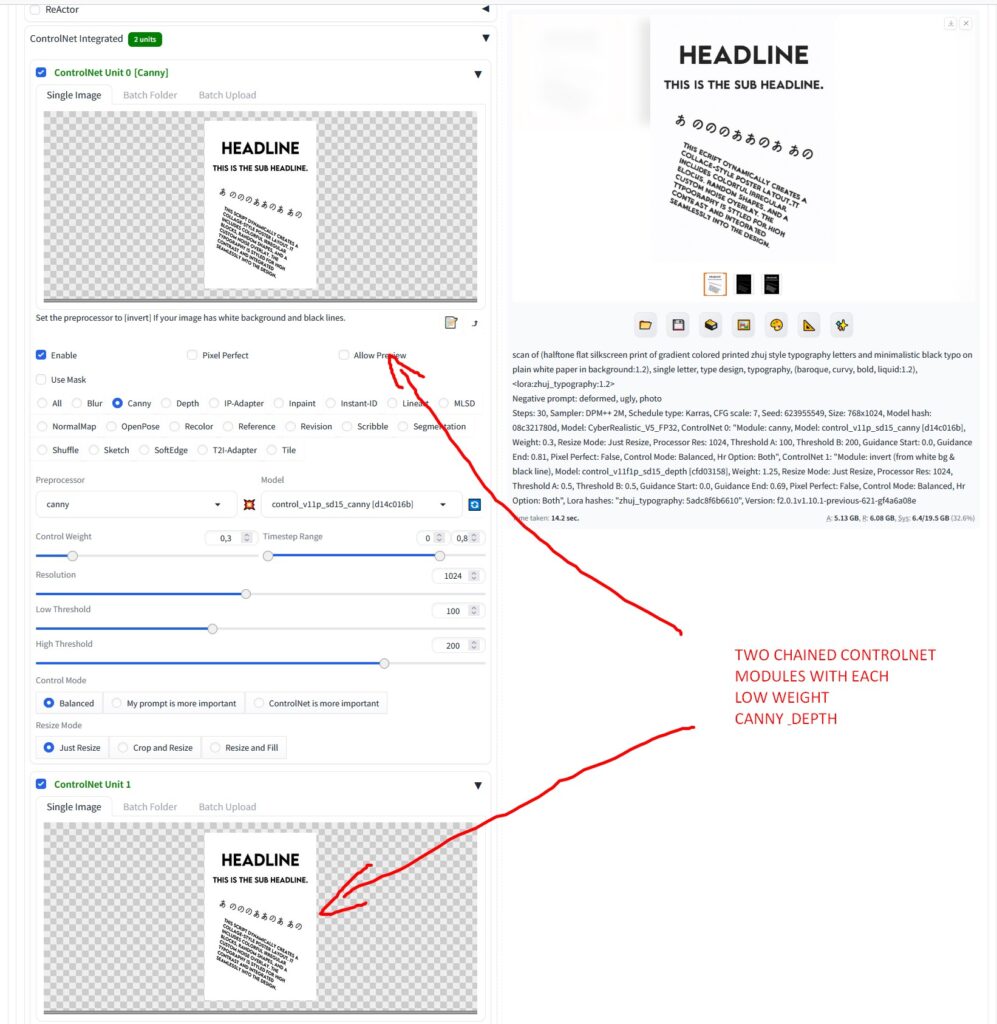

By using Stable Diffusion 1.5 with ControlNet Depth and/or Canny, we can push the diffusion toward fairly tangible results. With a simple black-and-white typographic offset image as input and a suitable prompt, the diffusion process can rework this plain digital preset into a more stylized result.
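To make the idea of a black-and-white typographic control image a bit more concrete, here is a minimal stdlib-only Python sketch. It rasterises a word from a hypothetical 5×5 bitmap font and derives an outline map with a simple 4-neighbour boundary test. `GLYPHS`, `render_text` and `outline` are illustrative names, not part of Forge/A1111 or ControlNet, and the boundary test is only a crude stand-in for the Canny preprocessor, not Canny itself. It merely shows why an edge-conditioned ControlNet "sees" the letters as thin white outlines on black.

```python
# Hypothetical 5x5 bitmap glyphs ("#" = ink), just enough letters for the demo.
GLYPHS = {
    "T": ["#####", "..#..", "..#..", "..#..", "..#.."],
    "Y": ["#...#", ".#.#.", "..#..", "..#..", "..#.."],
    "P": ["####.", "#...#", "####.", "#....", "#...."],
    "E": ["#####", "#....", "####.", "#....", "#####"],
}

INK, PAPER = 0, 255  # black type on a white background


def render_text(text, scale=4, margin=4, gap=1):
    """Rasterise `text` into a black-on-white grid (rows of 0/255 values)."""
    cell = 5 * scale  # each glyph is 5x5 cells, each cell is scale x scale pixels
    n = len(text)
    width = 2 * margin + n * cell + max(n - 1, 0) * gap * scale
    height = 2 * margin + cell
    img = [[PAPER] * width for _ in range(height)]
    for i, ch in enumerate(text):
        x0 = margin + i * (cell + gap * scale)  # left edge of this glyph
        for gy, row in enumerate(GLYPHS[ch]):
            for gx, mark in enumerate(row):
                if mark != "#":
                    continue
                # Blow each glyph cell up into a solid scale x scale block of ink.
                for dy in range(scale):
                    for dx in range(scale):
                        img[margin + gy * scale + dy][x0 + gx * scale + dx] = INK
    return img


def outline(img):
    """Crude edge map: ink pixels touching paper (4-neighbourhood) become white."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]  # black background, like a Canny map
    for y in range(h):
        for x in range(w):
            if img[y][x] != INK:
                continue
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                # Image borders count as paper, so glyphs at the edge get outlined.
                if not (0 <= ny < h and 0 <= nx < w) or img[ny][nx] == PAPER:
                    edges[y][x] = 255
                    break
    return edges


if __name__ == "__main__":
    for row in outline(render_text("TYPE")):
        print("".join("#" if v else "." for v in row))
```

Printing the result shows hollow letter outlines: the solid strokes of the input collapse into contours. That is roughly the kind of information an edge-based ControlNet keeps, while a depth-based one would instead treat the black areas as a relief.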

In addition to the ControlNet guidance, it helps to add a type-specific LoRA to the render process. Felix Dölker did a great job training a LoRA for exactly that purpose. Visit the project or get the LoRA directly from Civitai.
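Conceptually, a LoRA does not replace the base model. It adds a small low-rank update to selected weight matrices, scaled by the strength you set (the multiplier in the `<lora:name:weight>` prompt tag in A1111/Forge). The plain-Python sketch below shows that arithmetic for a single matrix, assuming the common kohya-style convention W' = W + strength · (alpha / rank) · (up @ down). `apply_lora` and `matmul` are illustrative helpers; real LoRA files, their layer names and which layers they patch are out of scope here.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_lora(w, down, up, alpha, strength=1.0):
    """Return W + strength * (alpha / rank) * (up @ down).

    w:    frozen base weight, shape (out x in)
    down: LoRA "A" matrix, shape (rank x in)
    up:   LoRA "B" matrix, shape (out x rank)
    """
    rank = len(down)
    delta = matmul(up, down)  # low-rank update, same shape as w
    scale = strength * alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


base = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 2.0]]    # rank 1
up = [[1.0], [0.0]]
print(apply_lora(base, down, up, alpha=1.0, strength=0.5))
```

At strength 0 the base weights come back unchanged, and higher strengths push the model further toward the LoRA's learned letterform style. This is why lowering the LoRA weight is a common way to trade style against prompt and ControlNet adherence.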

To bring all of this together in a quick workflow, you can use Forge or A1111 with their ControlNet modules (built into Forge, installed as an extension in A1111).
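If you prefer scripting the same setup instead of clicking through the UI, A1111 exposes a `/sdapi/v1/txt2img` endpoint when launched with `--api`, and the ControlNet extension hooks into it via `alwayson_scripts`. The sketch below only builds and posts such a request with the standard library. Treat it as an assumption-laden starting point: the ControlNet unit keys (`image`, `module`, `model`, `weight`) follow the sd-webui-controlnet extension and have changed between versions, the model name `control_v11p_sd15_canny` must match what is installed locally, Forge's built-in ControlNet may expect different fields, and `type_lora` is a hypothetical LoRA filename.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, control_png, lora_name, lora_weight=0.8,
                          module="canny", model="control_v11p_sd15_canny"):
    """Assemble a txt2img request with one ControlNet unit (keys are assumptions)."""
    return {
        # LoRA is activated through A1111's <lora:filename:multiplier> prompt syntax.
        "prompt": f"{prompt} <lora:{lora_name}:{lora_weight}>",
        "steps": 25,        # illustrative values, tune to taste
        "cfg_scale": 7,
        "alwayson_scripts": {
            "controlnet": {
                "args": [{
                    "enabled": True,
                    # The black-and-white typographic image, base64-encoded.
                    "image": base64.b64encode(control_png).decode("ascii"),
                    "module": module,   # preprocessor, e.g. "canny" or "depth_midas"
                    "model": model,     # must match an installed ControlNet model
                    "weight": 1.0,
                }]
            }
        },
    }


def send(payload, url="http://127.0.0.1:7860/sdapi/v1/txt2img"):
    """POST the payload to a locally running A1111 and return base64 PNG strings."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["images"]
```

A typical call would read the typographic PNG as bytes, pass it to `build_txt2img_payload` together with a style prompt, then hand the result to `send` and decode the returned images with `base64.b64decode`.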

The result is readable, but it is still quite far from actively designing with generative diffusion models. :/