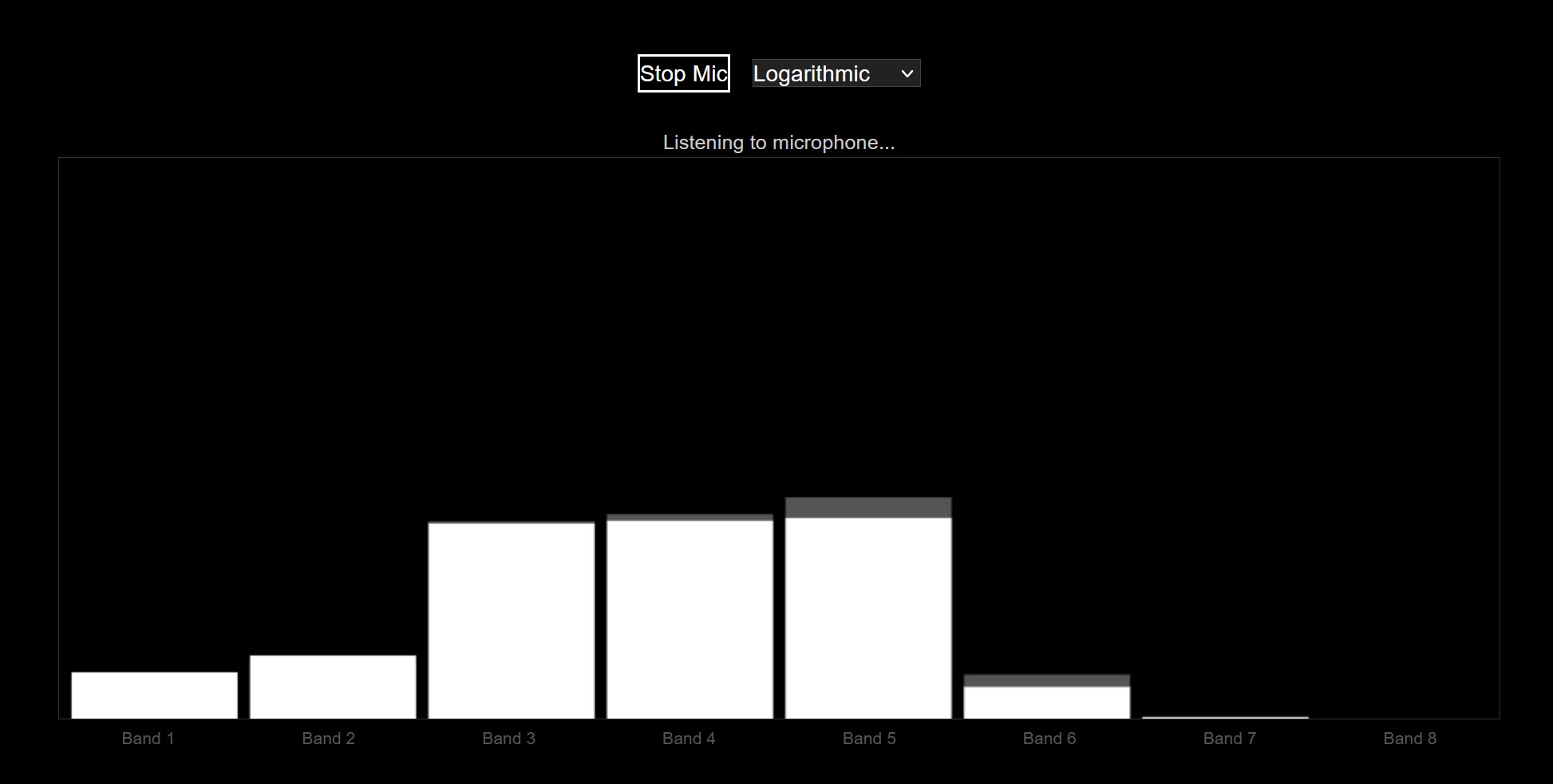

Basic real-time sound analytics tools

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Real-time Audio Analyzer</title>

<script src="https://cdn.tailwindcss.com"></script>

<style>

body {

font-family: 'Inter', sans-serif;

background-color: #000;

color: #fff;

display: flex;

justify-content: center;

align-items: center;

min-height: 100vh;

flex-direction: column;

box-sizing: border-box;

padding: 1rem;

}

.container {

width: 100%;

max-width: 800px;

display: flex;

flex-direction: column;

align-items: center;

}

button {

padding: 0.75rem 2rem;

font-size: 1rem;

font-weight: 600;

background-color: #fff;

color: #000;

border: 2px solid #fff;

transition: all 0.3s ease;

cursor: pointer;

margin-bottom: 1rem;

border-radius: 0;

}

button:hover {

background-color: #222;

color: #fff;

}

canvas {

width: 100%;

height: 400px;

background-color: #000;

border: 1px solid #333;

touch-action: none;

}

.status-message {

margin-top: 0.5rem;

font-size: 0.9rem;

color: #ccc;

text-align: center;

}

.band-labels {

display: flex;

justify-content: space-between;

width: 100%;

margin-top: 0.25rem;

}

.band-labels span {

font-size: 0.75rem;

color: #555;

text-align: center;

flex-grow: 1;

}

.controls {

display: flex;

align-items: center;

gap: 1rem;

margin-bottom: 1rem;

}

select {

background-color: #222;

color: #fff;

border: 1px solid #444;

padding: 0.25rem 0.5rem;

border-radius: 0;

}

</style>

</head>

<body class="bg-black text-white">

<div class="container">

<div class="controls">

<button id="mic-button">Start Mic</button>

<select id="band-mode">

<option value="logarithmic">Logarithmic</option>

<option value="octave">Octave Bands</option>

<option value="critical">Critical Bands</option>

</select>

</div>

<span id="status-message" class="status-message">Click 'Start Mic' to begin.</span>

<canvas id="visualizer"></canvas>

<div class="band-labels">

<span>Band 1</span>

<span>Band 2</span>

<span>Band 3</span>

<span>Band 4</span>

<span>Band 5</span>

<span>Band 6</span>

<span>Band 7</span>

<span>Band 8</span>

</div>

</div>

<script>

// --- Global Variables and Setup ---

const canvas = document.getElementById('visualizer');

const ctx = canvas.getContext('2d');

const micButton = document.getElementById('mic-button');

const statusMessage = document.getElementById('status-message');

const bandModeSelect = document.getElementById('band-mode');

const bandLabels = document.querySelector('.band-labels');

// Audio API variables

let audioContext;

let analyser;

let source;

let isRunning = false;

// FFT and visualization variables

// An 8192-point FFT yields 4096 frequency bins, each roughly 5.9 Hz wide at a 48 kHz sample rate.

const FFT_SIZE = 8192;

const NUM_BANDS = 8;

let dataArray = new Uint8Array(FFT_SIZE / 2);

let rawBandData = new Array(NUM_BANDS).fill(0);

let smoothedBandData = new Array(NUM_BANDS).fill(0);

const SMOOTHING_FACTOR = 0.2;

let animationFrameId;

// Band label configurations for different modes

const bandLabelConfigs = {

'logarithmic': ['Band 1', 'Band 2', 'Band 3', 'Band 4', 'Band 5', 'Band 6', 'Band 7', 'Band 8'],

'octave': ['31Hz', '63Hz', '125Hz', '250Hz', '500Hz', '1kHz', '2kHz', '4kHz'],

'critical': ['<100Hz', '100-200Hz', '200-400Hz', '400-750Hz', '750-1250Hz', '1.25-2kHz', '2-3.5kHz', '>3.5kHz']

};

// --- Core Functions ---

/**

* Initializes the audio context and gets the microphone stream.

*/

async function setupAudio() {

try {

const stream = await navigator.mediaDevices.getUserMedia({ audio: true });

audioContext = new (window.AudioContext || window.webkitAudioContext)();

source = audioContext.createMediaStreamSource(stream);

analyser = audioContext.createAnalyser();

analyser.fftSize = FFT_SIZE;

analyser.minDecibels = -90;

analyser.maxDecibels = -10;

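// The analyser averages successive FFT frames itself, so the "raw" bars are already time-smoothed.

analyser.smoothingTimeConstant = 0.85;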

// Connect the audio graph: source -> analyser.

// We do NOT connect the analyser to the destination to prevent audio feedback.

source.connect(analyser);

isRunning = true;

micButton.textContent = 'Stop Mic';

statusMessage.textContent = 'Listening to microphone...';

resizeCanvas();

window.addEventListener('resize', resizeCanvas);

animationLoop();

} catch (err) {

console.error('Error accessing microphone:', err);

statusMessage.textContent = 'Error: Could not access microphone.';

}

}

/**

* Stops the audio processing and visualization.

*/

function stopAudio() {

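// Stop the microphone tracks so the browser releases the input device;

// closing the AudioContext alone does not end the capture.

if (source) {

source.mediaStream.getTracks().forEach((track) => track.stop());

source.disconnect();

source = null;

}

if (audioContext) {

audioContext.close();

audioContext = null;

}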

if (animationFrameId) {

cancelAnimationFrame(animationFrameId);

}

isRunning = false;

micButton.textContent = 'Start Mic';

statusMessage.textContent = 'Click \'Start Mic\' to begin.';

ctx.clearRect(0, 0, canvas.width, canvas.height);

}

/**

* Main animation and processing loop.

*/

function animationLoop() {

if (!isRunning) return;

analyser.getByteFrequencyData(dataArray);

const mode = bandModeSelect.value;

let newBandData;

// Select the appropriate band calculation function

switch (mode) {

case 'logarithmic':

newBandData = calculateLogBands(dataArray);

break;

case 'octave':

newBandData = calculateOctaveBands(dataArray);

break;

case 'critical':

newBandData = calculateCriticalBands(dataArray);

break;

default:

newBandData = calculateLogBands(dataArray);

}

rawBandData = newBandData;

smoothedBandData = applySmoothing(newBandData);

drawVisualization();

animationFrameId = requestAnimationFrame(animationLoop);

}

/**

* Calculates 8 bands with equal logarithmic spacing (20Hz - 20000Hz).

*/

function calculateLogBands(fftData) {

const bands = new Array(NUM_BANDS).fill(0);

const sampleRate = audioContext.sampleRate;

const fftBinSize = sampleRate / analyser.fftSize;

const logMin = Math.log(20);

const logMax = Math.log(20000);

const logStep = (logMax - logMin) / NUM_BANDS;

for (let i = 0; i < NUM_BANDS; i++) {

const startFreq = Math.exp(logMin + i * logStep);

const endFreq = Math.exp(logMin + (i + 1) * logStep);

const startBin = Math.floor(startFreq / fftBinSize);

const endBin = Math.ceil(endFreq / fftBinSize);

let sum = 0;

let count = 0;

for (let j = startBin; j < endBin && j < fftData.length; j++) {

sum += fftData[j];

count++;

}

bands[i] = count > 0 ? sum / count : 0;

}

return bands;

}

/**

* Calculates bands based on standard octave center frequencies.

*/

function calculateOctaveBands(fftData) {

const bands = new Array(NUM_BANDS).fill(0);

const sampleRate = audioContext.sampleRate;

const fftBinSize = sampleRate / analyser.fftSize;

// Standard octave band center frequencies (Hz)

const centerFreqs = [31.5, 63, 125, 250, 500, 1000, 2000, 4000];

for (let i = 0; i < NUM_BANDS; i++) {

// Calculate band edges (half-octave on either side)

const startFreq = centerFreqs[i] / Math.sqrt(2);

const endFreq = centerFreqs[i] * Math.sqrt(2);

const startBin = Math.floor(startFreq / fftBinSize);

const endBin = Math.ceil(endFreq / fftBinSize);

let sum = 0;

let count = 0;

for (let j = startBin; j < endBin && j < fftData.length; j++) {

sum += fftData[j];

count++;

}

bands[i] = count > 0 ? sum / count : 0;

}

return bands;

}

/**

* Calculates bands based on the critical bands (Bark scale), which model human hearing.

* This is a simplified, approximate implementation.

*/

function calculateCriticalBands(fftData) {

const bands = new Array(NUM_BANDS).fill(0);

const sampleRate = audioContext.sampleRate;

const fftBinSize = sampleRate / analyser.fftSize;

// Simplified critical band boundaries in Hz

const bandEdges = [0, 100, 200, 400, 750, 1250, 2000, 3500, 20000];

for (let i = 0; i < NUM_BANDS; i++) {

const startFreq = bandEdges[i];

const endFreq = bandEdges[i + 1];

const startBin = Math.floor(startFreq / fftBinSize);

const endBin = Math.ceil(endFreq / fftBinSize);

let sum = 0;

let count = 0;

for (let j = startBin; j < endBin && j < fftData.length; j++) {

sum += fftData[j];

count++;

}

bands[i] = count > 0 ? sum / count : 0;

}

return bands;

}

/**

* Applies an exponential moving average (EMA) to smooth the band data:

* smoothed = previous * (1 - SMOOTHING_FACTOR) + current * SMOOTHING_FACTOR.

*/

function applySmoothing(newBands) {

const smoothed = new Array(NUM_BANDS);

for (let i = 0; i < NUM_BANDS; i++) {

smoothed[i] = smoothedBandData[i] * (1 - SMOOTHING_FACTOR) + newBands[i] * SMOOTHING_FACTOR;

}

return smoothed;

}

/**

* Draws the raw and smoothed band data on the canvas.

*/

function drawVisualization() {

ctx.clearRect(0, 0, canvas.width, canvas.height);

const barWidth = (canvas.width / NUM_BANDS) - 10;

const barSpacing = (canvas.width - (barWidth * NUM_BANDS)) / (NUM_BANDS + 1);

for (let i = 0; i < NUM_BANDS; i++) {

const x = barSpacing + (barSpacing + barWidth) * i;

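// Draw the raw band data bar (solid, darker gray). The white bar is drawn over it afterwards,

// so the gray only shows while the raw level exceeds the smoothed level.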

const rawBarHeight = (rawBandData[i] / 255) * canvas.height;

ctx.fillStyle = '#555';

ctx.fillRect(x, canvas.height - rawBarHeight, barWidth, rawBarHeight);

// Draw the smoothed band data bar (solid, white)

const smoothedBarHeight = (smoothedBandData[i] / 255) * canvas.height;

ctx.fillStyle = '#fff';

ctx.fillRect(x, canvas.height - smoothedBarHeight, barWidth, smoothedBarHeight);

}

}

/**

* Resizes the canvas to fit its container on window resize.

*/

function resizeCanvas() {

canvas.width = canvas.offsetWidth;

canvas.height = canvas.offsetHeight;

}

/**

* Updates the band labels based on the selected mode.

*/

function updateBandLabels() {

const mode = bandModeSelect.value;

const labels = bandLabelConfigs[mode];

bandLabels.innerHTML = labels.map(label => `<span>${label}</span>`).join('');

}

// --- Event Listeners ---

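micButton.addEventListener('click', async () => {

if (isRunning) {

stopAudio();

return;

}

// Disable the button while the permission prompt is open so a second click

// cannot start a duplicate audio graph.

micButton.disabled = true;

try {

await setupAudio();

} finally {

micButton.disabled = false;

}

});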

bandModeSelect.addEventListener('change', updateBandLabels);

// Initial label setup

updateBandLabels();

</script>

</body>

</html>
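
The three band functions in the page all do the same thing: they turn a frequency range into a span of FFT bins and average the bytes in that span. As a minimal sketch of that mapping outside the browser, the helper below repeats the edge arithmetic of `calculateLogBands` and prints the bin range each band would cover. The name `logBandBins` is hypothetical and not part of the page, and the 48 kHz sample rate is an assumption; the page itself reads the rate from `audioContext.sampleRate`, which may differ.

```javascript
// Hypothetical helper (not part of the page): lists the FFT bin range that
// each logarithmic band covers, using the same floor/ceil arithmetic as
// calculateLogBands.
function logBandBins(sampleRate, fftSize, numBands = 8, minHz = 20, maxHz = 20000) {
  const binWidth = sampleRate / fftSize; // Hz per FFT bin
  const binCount = fftSize / 2;          // equals analyser.frequencyBinCount
  const logMin = Math.log(minHz);
  const logStep = (Math.log(maxHz) - logMin) / numBands;
  const bands = [];
  for (let i = 0; i < numBands; i++) {
    const startHz = Math.exp(logMin + i * logStep);
    const endHz = Math.exp(logMin + (i + 1) * logStep);
    const startBin = Math.floor(startHz / binWidth);
    // endBin is exclusive and clamped to the number of available bins.
    const endBin = Math.min(Math.ceil(endHz / binWidth), binCount);
    bands.push({ startHz, endHz, startBin, endBin });
  }
  return bands;
}

// Assumed sample rate of 48 kHz; browsers may report a different value.
const bands = logBandBins(48000, 8192);
for (const b of bands) {
  console.log(
    `${b.startHz.toFixed(1)}-${b.endHz.toFixed(1)} Hz -> bins ${b.startBin}..${b.endBin - 1}`
  );
}
```

Under these assumptions the lowest band averages only a handful of bins while the highest averages well over a thousand, so the low bars react to much narrower slices of the spectrum. Because the start bin is rounded down and the end bin rounded up, neighbouring bands can also share a boundary bin.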