
// Import necessary libraries

import gab.opencv.*;

import processing.video.*;

import java.awt.*;

// Declare global variables for video capture and OpenCV

Capture video;

OpenCV opencv;

// Variables for face tracking

float tx, ty, ts; // Target x, y, and size

float sx, sy, ss; // Smoothed x, y, and size

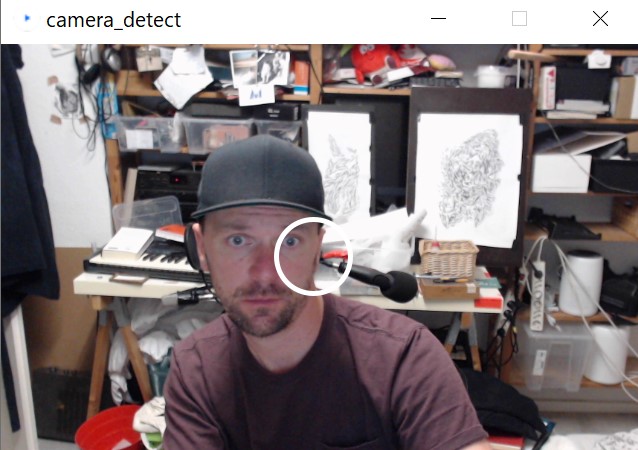

void setup() {

// Set up the canvas size

size(640, 480);

// Initialize the video capture from the default camera at 640x480

video = new Capture(this, 640, 480);

// Initialize OpenCV with the same dimensions as the canvas

opencv = new OpenCV(this, 640, 480);

// Load the frontal face cascade classifier

opencv.loadCascade(OpenCV.CASCADE_FRONTALFACE);

// Start the video capture

video.start();

}

void draw() {

// Load the current frame from the video into OpenCV

opencv.loadImage(video);

// Display the video frame on the canvas

image(video, 0, 0);

// Detect faces in the current frame

Rectangle[] faces = opencv.detect();

// If at least one face is detected

if (faces.length > 0) {

// If the detected face is wider than 40 pixels

if (faces[0].width > 40) {

// Set the target to the center of the detected face, with a size based on the face dimensions

tx = faces[0].x + faces[0].width / 2.0; // 2.0 avoids integer division

ty = faces[0].y + faces[0].height / 2.0;

ts = (faces[0].width + faces[0].height) * 0.3;

}

}

// Smoothly interpolate current position and size to the target values

sx = lerp(sx, tx, 0.1);

sy = lerp(sy, ty, 0.1);

ss = lerp(ss, ts, 0.1);

// Draw a circle around the face

noFill();

stroke(255); // White color

strokeWeight(5); // Thickness of the circle

circle(sx, sy, ss); // Draw the circle at smoothed coordinates with smoothed size

}

// Event handler for video capture

void captureEvent(Capture c) {

c.read(); // Read the new frame from the capture

}
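The `lerp(..., 0.1)` calls in the sketch above move the circle 10% of the remaining distance toward the detected face on every frame, which hides the frame-to-frame jitter of the detector. The following plain-Java sketch (no Processing or camera needed) illustrates how quickly that settles. The class name `LerpSmoothing` and the method `smooth` are hypothetical helpers, not part of Processing, and `lerp` is re-implemented here on the assumption that it follows the formula `start + (stop - start) * amt`.

```java
// Hypothetical helper for illustration only; not part of Processing.
class LerpSmoothing {

    // Linear interpolation: move from start toward stop by the fraction amt.
    static float lerp(float start, float stop, float amt) {
        return start + (stop - start) * amt;
    }

    // Apply the per-frame smoothing step a fixed number of times
    // against a target that does not move.
    static float smooth(float start, float target, float amt, int frames) {
        float value = start;
        for (int i = 0; i < frames; i++) {
            value = lerp(value, target, amt);
        }
        return value;
    }

    public static void main(String[] args) {
        // A face "jumps" 100 pixels; factor 0.1 as in the sketch.
        int[] checkpoints = {1, 10, 20, 44};
        for (int frames : checkpoints) {
            System.out.println(frames + " frames: " + smooth(0f, 100f, 0.1f, frames));
        }
    }
}
```

After n frames the remaining gap is 0.9^n of the original, so a 100-pixel jump is within about one pixel of the target after 44 frames. A larger factor reacts faster but lets more detector jitter through.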

// Import necessary libraries

import gab.opencv.*;

import processing.video.*;

import java.awt.*;

Capture video;

OpenCV opencv;

// Variables to store previous frame for motion detection

PImage prevFrame;

// Variable to store the amount of motion

float motionAmount = 0;

float smoothedMotionAmount = 0;

void setup() {

// Set up the canvas size

size(640, 480);

// Initialize the video capture

video = new Capture(this, 640, 480);

// Initialize OpenCV with the same dimensions as the canvas

opencv = new OpenCV(this, 640, 480);

// Start the video capture

video.start();

}

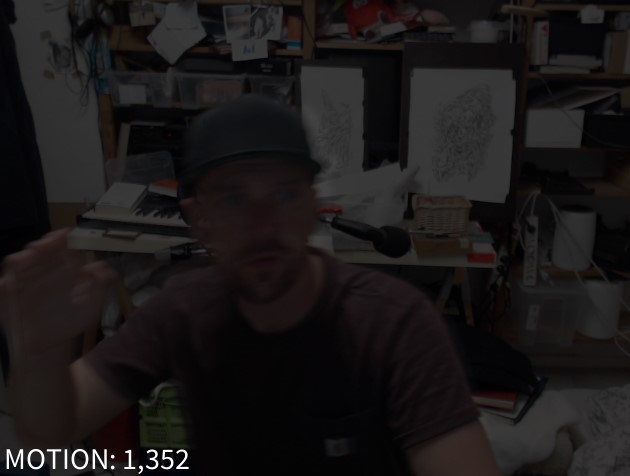

void draw() {

// New frames are read in captureEvent(); pass the latest one to OpenCV

opencv.loadImage(video);

// Display the video frame on the canvas

image(video, 0, 0);

// Check if there is a previous frame

if (prevFrame != null) {

// Convert current frame and previous frame to grayscale

PImage currFrameGray = createImage(video.width, video.height, RGB);

PImage prevFrameGray = createImage(video.width, video.height, RGB);

currFrameGray.copy(video, 0, 0, video.width, video.height, 0, 0, video.width, video.height);

prevFrameGray.copy(prevFrame, 0, 0, prevFrame.width, prevFrame.height, 0, 0, prevFrame.width, prevFrame.height);

currFrameGray.filter(GRAY);

prevFrameGray.filter(GRAY);

// Compute the absolute difference between the current frame and previous frame

PImage diffFrame = createImage(video.width, video.height, RGB);

diffFrame.loadPixels();

currFrameGray.loadPixels();

prevFrameGray.loadPixels();

int motionPixelCount = 0;

for (int i = 0; i < diffFrame.pixels.length; i++) {

int diff = int(abs(brightness(currFrameGray.pixels[i]) - brightness(prevFrameGray.pixels[i])));

diffFrame.pixels[i] = color(diff);

if (diff > 30) { // Threshold to consider significant motion

motionPixelCount++;

}

}

diffFrame.updatePixels();

// Calculate the motion amount as the fraction (0 to 1) of pixels with significant changes

motionAmount = (float)motionPixelCount / diffFrame.pixels.length;

// Convert to a percentage and smooth it using lerp

smoothedMotionAmount = lerp(smoothedMotionAmount, motionAmount*100, 0.1);

// Draw the difference image as an overlay on the original video

tint(255, 180); // Set transparency

image(diffFrame, 0, 0);

noTint(); // Reset the tint so the next video frame is drawn fully opaque

// Display the smoothed motion amount

fill(255);

textSize(30);

text("MOTION: " + nf(smoothedMotionAmount, 1, 3), 10, height - 10);

}

// Save the current frame as the previous frame for the next iteration

prevFrame = createImage(video.width, video.height, RGB);

prevFrame.copy(video, 0, 0, video.width, video.height, 0, 0, video.width, video.height);

}

// Event handler for video capture

void captureEvent(Capture c) {

c.read(); // Read the new frame from the capture

}
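The motion value in the sketch above comes from frame differencing: each pixel's brightness is compared with the same pixel in the previous frame, and pixels that change by more than a threshold (30 in the sketch) are counted. As a minimal sketch of just that calculation, here is a plain-Java version that works on arrays of grayscale values instead of `PImage` objects. The names `MotionFraction` and `motionFraction` are hypothetical, and the tiny four-pixel "frames" are made-up illustration data.

```java
// Hypothetical helper for illustration only; not part of Processing.
class MotionFraction {

    // prev and curr hold one grayscale value (0-255) per pixel.
    // Returns the fraction (0 to 1) of pixels whose brightness changed
    // by more than the threshold.
    static float motionFraction(int[] prev, int[] curr, int threshold) {
        if (prev.length != curr.length) {
            throw new IllegalArgumentException("frames must have the same size");
        }
        if (curr.length == 0) {
            return 0f;
        }
        int changed = 0;
        for (int i = 0; i < curr.length; i++) {
            if (Math.abs(curr[i] - prev[i]) > threshold) {
                changed++;
            }
        }
        return (float) changed / curr.length;
    }

    public static void main(String[] args) {
        int[] prev = {10, 10, 10, 10};
        int[] curr = {10, 50, 10, 200}; // two of the four pixels change strongly
        float fraction = motionFraction(prev, curr, 30);
        System.out.println("motion: " + (fraction * 100f) + " %");
    }
}
```

A lower threshold makes the measure more sensitive but also counts camera noise as motion; the value 30 used in the sketch is a starting point that is worth tuning for the camera and lighting at hand.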

—————————–

// Import necessary libraries

import gab.opencv.*;

import processing.video.*;

import java.awt.*;

// Declare global variables for video capture and OpenCV

Capture video;

OpenCV opencv;

// Variables for face tracking

float tx, ty, ts; // Target x, y, and size

float sx, sy, ss; // Smoothed x, y, and size

void setup() {

// Set up the canvas size

size(640, 480);

// Initialize the video capture from the default camera at 640x480

video = new Capture(this, 640, 480);

// Initialize OpenCV with the same dimensions as the canvas

opencv = new OpenCV(this, 640, 480);

// Load the frontal face cascade classifier

opencv.loadCascade(OpenCV.CASCADE_FRONTALFACE);

// Start the video capture

video.start();

}

void draw() {

// Load the current frame from the video into OpenCV

opencv.loadImage(video);

// Display the video frame on the canvas

image(video, 0, 0);

// Detect faces in the current frame

Rectangle[] faces = opencv.detect();

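// If at least two faces are detected

if (faces.length > 1) {

// Centers of the first two detected faces

float f1x = faces[0].x + faces[0].width / 2.0;

float f1y = faces[0].y + faces[0].height / 2.0;

float f2x = faces[1].x + faces[1].width / 2.0;

float f2y = faces[1].y + faces[1].height / 2.0;

// Target position: the midpoint between the two faces

tx = (f1x + f2x) * 0.5;

ty = (f1y + f2y) * 0.5;

// Target size: mean width of the two faces (an assumed choice of size)

ts = (faces[0].width + faces[1].width) * 0.5;

// Mark the unsmoothed midpoint with a small circle

circle(tx, ty, 22);

}

// Smoothly interpolate the current position and size toward the targets

sx = lerp(sx, tx, 0.1);

sy = lerp(sy, ty, 0.1);

ss = lerp(ss, ts, 0.1);

// Draw a circle at the smoothed midpoint between the two faces

noFill();

stroke(255); // White color

strokeWeight(5); // Thickness of the circle

circle(sx, sy, ss); // Draw the circle at smoothed coordinates with smoothed size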

}

// Event handler for video capture

void captureEvent(Capture c) {

c.read(); // Read the new frame from the capture

}
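The sketch above steers the circle toward a point halfway between two detected faces. As a minimal, camera-free illustration of that midpoint calculation, here is a plain-Java version that works on the centers of two rectangles. `FaceMidpoint` and `midpoint` are hypothetical names, and the rectangles in `main` are made-up stand-ins for detection results; `Rectangle.getCenterX()` and `getCenterY()` come from `java.awt`.

```java
import java.awt.Rectangle;
import java.awt.geom.Point2D;

// Hypothetical helper for illustration only; not part of Processing or OpenCV.
class FaceMidpoint {

    // Returns the point halfway between the centers of two rectangles.
    static Point2D.Float midpoint(Rectangle a, Rectangle b) {
        float x = (float) ((a.getCenterX() + b.getCenterX()) / 2.0);
        float y = (float) ((a.getCenterY() + b.getCenterY()) / 2.0);
        return new Point2D.Float(x, y);
    }

    public static void main(String[] args) {
        // Made-up rectangles standing in for two detected faces.
        Rectangle first = new Rectangle(100, 100, 50, 50);
        Rectangle second = new Rectangle(300, 200, 100, 100);
        Point2D.Float m = midpoint(first, second);
        System.out.println("midpoint: " + m.x + ", " + m.y);
    }
}
```

Using the centers rather than the top-left corners keeps the midpoint between the faces themselves, even when the two faces are detected at very different sizes.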