
TouchDesigner API

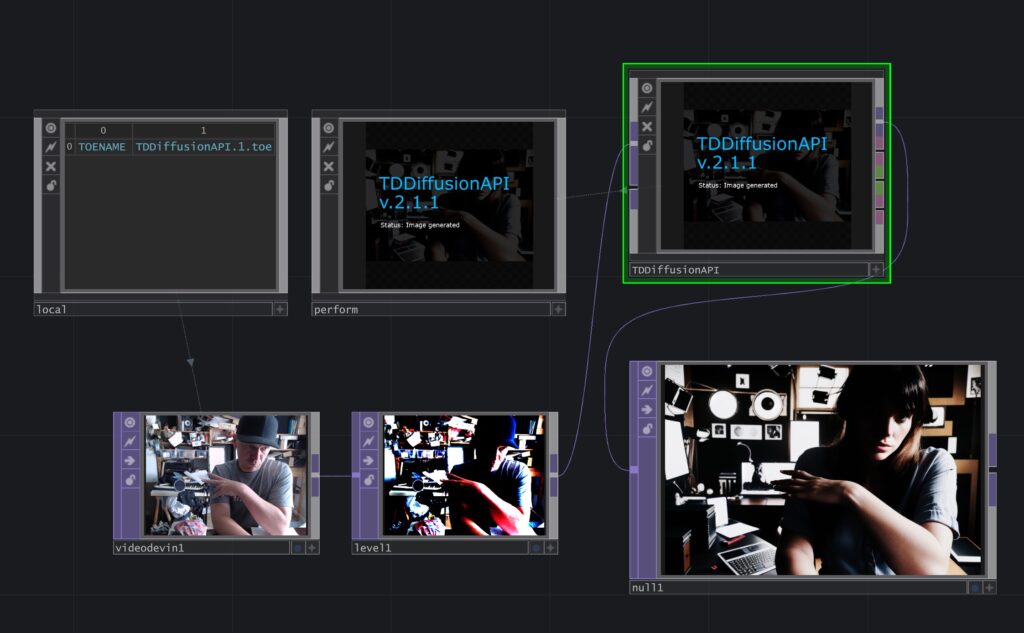

TouchDesigner comes in handy as a quick prototyping engine for generative diffusion. It natively handles 2D textures, offers a visual node-based programming UI, and has an active community. The easiest way to connect it to a locally installed diffusion system is through custom plugins provided by that community.

TouchDesigner <> A1111 / ForgeUI

This plugin works right out of the box. All it needs is a locally running instance of A1111 or ForgeUI on its usual port (7860 by default for A1111) with the API enabled. For A1111, this means launching it with the `--api` flag. Once initialized, you can call the standard txt2img and img2img workflows. Check out the plugin's GitHub page by olegchomp, TDDiffusionAPI, for updates and downloads.

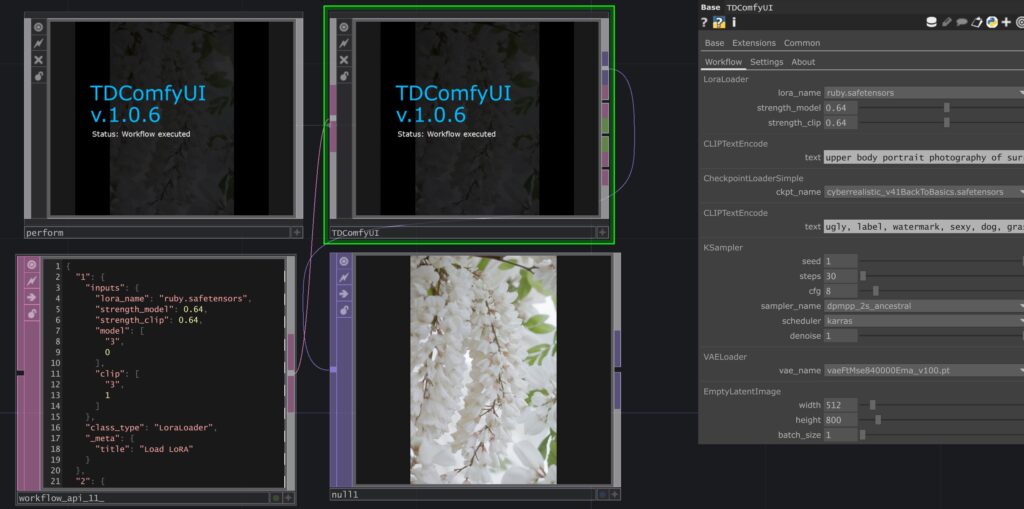

TouchDesigner <> ComfyUI

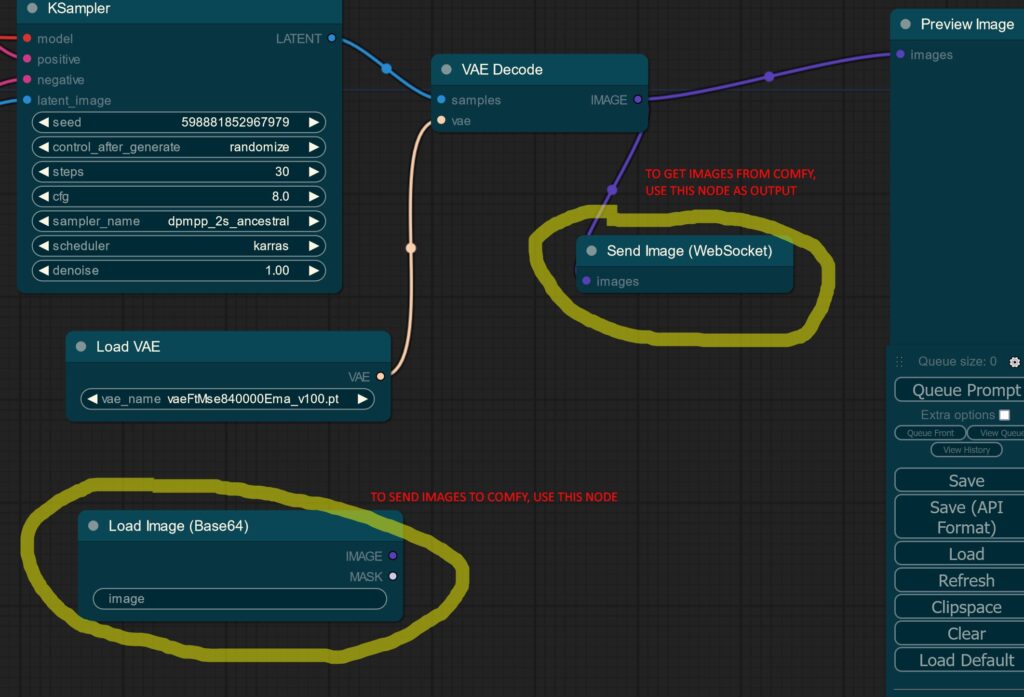

olegchomp also provides a simple interface to ComfyUI (props!). Unlike the plugin above, this one requires you to implement the workflow in the TouchDesigner project itself. Check his GitHub page, TDComfyUI, for updates and the current download.

To make it work, create a workflow in the default ComfyUI environment and export it in API format as a *.json file. Drop this file into your TouchDesigner project and connect it to your ComfyUIAPI node. Keep in mind that the output node in ComfyUI must be a WebSocket node, so that the images are sent from ComfyUI to TouchDesigner.

Unity API

Unity is first and foremost a game engine for 3D content, so community plugins for diffusion systems are scarce. Communication with the local diffusion system has to be written by hand, which means code.

Unity <> A1111 / ForgeUI

The interface consists of two scripts. ai_controller.cs makes the main calls to the local SD API and processes the response. The API returns the image as base64 data, which the script decodes back into a visible texture (sd_return_tex). The second script gives the user processing feedback: it polls the SD API for its current state and makes that state available inside Unity.

```csharp
using System;
using System.Collections;
using System.Text;
using UnityEngine;
using UnityEngine.Networking;

public class ai_controller : MonoBehaviour
{
    public string apiEndpoint_http = "http://127.0.0.1:7860"; // Replace with your API endpoint

    public Texture2D sd_return_tex;   // decoded result image
    public static bool is_calling_sd; // true while a request is in flight

    // SD works on an 8x downscaled latent, so keep width/height multiples of 8
    int w = 600;
    int h = 304;

    // img2img input: base64-encoded image parsed from a Texture2D
    string base64Img = "";

    public static float main_denoise_factor = 0.3f;

    public void CALL_SD_TXT_2_IMG()
    {
        RequestData requestData = new RequestData();
        //requestData.init_images = new[] { base64Img };       // img2img only
        //requestData.denoising_strength = main_denoise_factor; // img2img only
        requestData.width = w;
        requestData.height = h;
        requestData.prompt = "landscape";                  // prompt_controller.full_positive_prompt;
        requestData.negative_prompt = "illustration ugly"; // prompt_controller.full_negative_prompt;

        string jsonData = JsonUtility.ToJson(requestData);
        is_calling_sd = true;

        // e.g. http://127.0.0.1:7860/sdapi/v1/txt2img
        StartCoroutine(PostRequest(apiEndpoint_http + "/sdapi/v1/txt2img", jsonData));
    }

    private IEnumerator PostRequest(string url, string json)
    {
        // "using" disposes the request and its native buffers when done
        using (UnityWebRequest request = new UnityWebRequest(url, "POST"))
        {
            request.timeout = 60; // seconds
            request.uploadHandler = new UploadHandlerRaw(Encoding.UTF8.GetBytes(json));
            request.downloadHandler = new DownloadHandlerBuffer();
            request.SetRequestHeader("Content-Type", "application/json");

            // Wait for the response without blocking the main thread
            yield return request.SendWebRequest();

            // request.result requires Unity 2020.2 or newer
            if (request.result == UnityWebRequest.Result.Success)
            {
                // The API returns the generated images as base64-encoded PNG strings
                aRESPONSE respi = JsonUtility.FromJson<aRESPONSE>(request.downloadHandler.text);
                byte[] rawbytes = Convert.FromBase64String(respi.images[0]);

                // LoadImage resizes the texture to the decoded image and uploads it to the GPU
                sd_return_tex = new Texture2D(w, h);
                sd_return_tex.LoadImage(rawbytes);
            }
            else
            {
                Debug.LogError("Request failed: " + request.error);
            }
        }
        is_calling_sd = false;
    }
}
```
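The controller above only wires up txt2img. The commented-out `init_images` and `denoising_strength` lines hint at the img2img path. Below is a minimal sketch of that variant as a standalone script, assuming the same local A1111/ForgeUI API and its `/sdapi/v1/img2img` endpoint. The names `sd_img2img`, `Img2ImgRequest`, `Img2ImgResponse`, `source_tex` and `result_tex` are hypothetical. The source texture needs Read/Write enabled and an uncompressed format so that `EncodeToPNG()` works, and its size should be a multiple of 8.

```csharp
using System;
using System.Collections;
using System.Text;
using UnityEngine;
using UnityEngine.Networking;

// Hypothetical img2img sketch; assumes a local A1111/ForgeUI API with --api enabled.
public class sd_img2img : MonoBehaviour
{
    public string apiEndpoint_http = "http://127.0.0.1:7860";
    public Texture2D source_tex;  // input image (Read/Write enabled, uncompressed)
    public Texture2D result_tex;  // leave empty; created on first result
    [Range(0f, 1f)] public float denoising_strength = 0.3f;

    public void CallImg2Img(string prompt)
    {
        Img2ImgRequest data = new Img2ImgRequest
        {
            prompt = prompt,
            // The API expects the input image(s) as base64 strings
            init_images = new[] { Convert.ToBase64String(source_tex.EncodeToPNG()) },
            denoising_strength = denoising_strength,
            width = source_tex.width,   // should be a multiple of 8
            height = source_tex.height
        };
        StartCoroutine(Post(apiEndpoint_http + "/sdapi/v1/img2img", JsonUtility.ToJson(data)));
    }

    IEnumerator Post(string url, string json)
    {
        using (UnityWebRequest req = new UnityWebRequest(url, "POST"))
        {
            req.uploadHandler = new UploadHandlerRaw(Encoding.UTF8.GetBytes(json));
            req.downloadHandler = new DownloadHandlerBuffer();
            req.SetRequestHeader("Content-Type", "application/json");
            req.timeout = 60;
            yield return req.SendWebRequest();

            if (req.result != UnityWebRequest.Result.Success)
            {
                Debug.LogError("img2img failed: " + req.error);
                yield break;
            }

            Img2ImgResponse resp = JsonUtility.FromJson<Img2ImgResponse>(req.downloadHandler.text);
            // LoadImage resizes the texture to the decoded PNG
            if (result_tex == null) result_tex = new Texture2D(2, 2);
            result_tex.LoadImage(Convert.FromBase64String(resp.images[0]));
        }
    }
}

[Serializable]
public class Img2ImgRequest
{
    public string prompt = "";
    public string negative_prompt = "";
    public string[] init_images;
    public float denoising_strength = 0.3f; // 0 = keep input, 1 = ignore input
    public int width = 512;
    public int height = 512;
    public int steps = 30;
    public string sampler_name = "Euler a";
    public bool send_images = true;
    public bool save_images = false;
}

[Serializable]
public class Img2ImgResponse
{
    public string[] images; // base64-encoded PNG images
}
```

You can call `CallImg2Img("landscape")` from a UI button or another script. Lower `denoising_strength` values keep more of the source image.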

```csharp
// Data classes used by ai_controller; they can live in the same file.
// JsonUtility maps public fields 1:1 to JSON keys, so field names must
// match the A1111 API parameter names exactly.

[System.Serializable]
public class RequestData
{
    public string prompt = "person beautiful";
    public string negative_prompt = "";
    // public string[] styles = { "string" };
    public int seed = -1; // -1 = random seed
    /*
    public int subseed = -1;
    public int subseed_strength = 0;
    public int seed_resize_from_h = -1;
    public int seed_resize_from_w = -1;
    */
    public string sampler_name = "Euler a";
    // public string sampler_name = "DPM++ 2M";
    public int batch_size = 1;
    public int steps = 30;
    public int cfg_scale = 8;
    public int width = 512;
    public int height = 512;
    public bool restore_faces = true;
    public bool tiling = false;
    // public bool do_not_save_samples = false;
    // public bool do_not_save_grid = false;
    // public float eta = 0;
    public float denoising_strength = 0.6f; // img2img strength; txt2img only uses it for hires fix
    public string[] init_images = { };      // base64 input image(s), img2img only
    /*
    public float s_min_uncond = 0;
    public float s_churn = 0;
    public float s_tmax = 0;
    public float s_tmin = 0;
    public float s_noise = 0;
    public bool override_settings_restore_afterwards = true;
    public string refiner_checkpoint = "string";
    public int refiner_switch_at = 0;
    public bool disable_extra_networks = false;
    public string hr_upscaler = "string";
    public int hr_second_pass_steps = 0;
    public int hr_resize_x = 0;
    public int hr_resize_y = 0;
    public string hr_checkpoint_name = "string";
    public string hr_sampler_name = "string";
    public string hr_prompt = "";
    public string hr_negative_prompt = "";
    public string sampler_index = "Euler a";
    // public string script_name = "string";
    // public string[] script_args = { };
    */
    public bool send_images = true;  // return the images in the HTTP response
    public bool save_images = false; // don't also save them on the SD server
    // public AlwaysonScripts alwayson_scripts = new AlwaysonScripts();
}

[System.Serializable]
public class aRESPONSE
{
    public string[] images; // base64-encoded PNG images
    public string info;     // generation info, itself a JSON-encoded string
}
```
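The second script (progress feedback) is not shown above. Below is a minimal sketch of what it could look like. It assumes the A1111/ForgeUI `GET /sdapi/v1/progress` endpoint, which reports `progress` (0 to 1) and `eta_relative` (estimated seconds remaining), and uses its `skip_current_image` query parameter. The names `sd_progress`, `ProgressResponse`, `FormatStatus` and the polling interval are hypothetical. The script reads the `ai_controller.is_calling_sd` flag so that it only polls while a generation is running.

```csharp
using System;
using System.Collections;
using System.Globalization;
using UnityEngine;
using UnityEngine.Networking;

// Hypothetical progress poller for the A1111/ForgeUI API.
public class sd_progress : MonoBehaviour
{
    public string apiEndpoint_http = "http://127.0.0.1:7860";
    public float pollInterval = 0.5f; // seconds between polls

    // Latest state, readable from UI scripts
    public static float progress;     // 0..1
    public static float etaSeconds;   // estimated remaining time
    public static string status = "";

    IEnumerator Start()
    {
        while (true)
        {
            if (ai_controller.is_calling_sd)
            {
                yield return StartCoroutine(Poll());
            }
            else
            {
                progress = 0f;
                etaSeconds = 0f;
            }
            yield return new WaitForSeconds(pollInterval);
        }
    }

    IEnumerator Poll()
    {
        // skip_current_image=true keeps the live preview image out of the response
        string url = apiEndpoint_http + "/sdapi/v1/progress?skip_current_image=true";
        using (UnityWebRequest req = UnityWebRequest.Get(url))
        {
            req.timeout = 5;
            yield return req.SendWebRequest();
            if (req.result != UnityWebRequest.Result.Success) yield break;

            ProgressResponse p = JsonUtility.FromJson<ProgressResponse>(req.downloadHandler.text);
            progress = p.progress;
            etaSeconds = p.eta_relative;
            status = FormatStatus(progress, etaSeconds);
        }
    }

    // Pure helper for a UI label, e.g. "50% (ETA 3.0 s)"
    public static string FormatStatus(float fraction, float eta)
    {
        return string.Format(CultureInfo.InvariantCulture, "{0}% (ETA {1:0.0} s)",
            Mathf.RoundToInt(fraction * 100f), eta);
    }
}

[Serializable]
public class ProgressResponse
{
    public float progress;     // 0..1 for the current job
    public float eta_relative; // estimated seconds remaining
    public string textinfo;    // optional status text
}
```

A UI label can simply display `sd_progress.status` in its `Update()`. Polling a lightweight GET endpoint every half second keeps the sketch simple. A shorter interval gives smoother feedback at the cost of more requests.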