using the webcam as a sensor

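low-res faceted webcam feed

using the webcam as a low-res, faceted brightness input device: the capture is scaled down to a 32 × 24 grid, and each cell is drawn as a grey rectangle whose shade is the average brightness of that pixel, mirrored horizontally like a selfie view.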

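```javascript
// grid resolution: the camera image is scaled down to gsx x gsy pixels
let gsx = 32;
let gsy = 24;
// size of one grid cell on the canvas (computed in setup)
let xscl = 1;
let yscl = 1;
let video;

function setup() {
  createCanvas(window.innerWidth, window.innerHeight);
  xscl = width / gsx;
  yscl = height / gsy;
  video = createCapture(VIDEO);
  video.size(gsx, gsy); // tiny capture: 1 video pixel = 1 grid cell
  video.hide();         // we draw our own version instead of the <video> element
  noSmooth();
}

function draw() {
  background(222);
  video.loadPixels();
  // the camera may not be delivering frames yet
  if (video.pixels.length === 0) return;
  noStroke();
  for (let x = 0; x < gsx; x++) {
    for (let y = 0; y < gsy; y++) {
      // RGBA offset of the mirrored pixel (columns read right to left)
      let index = (video.width - x - 1 + y * video.width) * 4;
      let r = video.pixels[index + 0];
      let g = video.pixels[index + 1];
      let b = video.pixels[index + 2];
      let bri = (r + g + b) / 3; // average brightness, 0..255
      let xpos = x * xscl;
      let ypos = y * yscl;
      fill(bri);
      rect(xpos, ypos, xscl, yscl);
    }
  }
}
```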

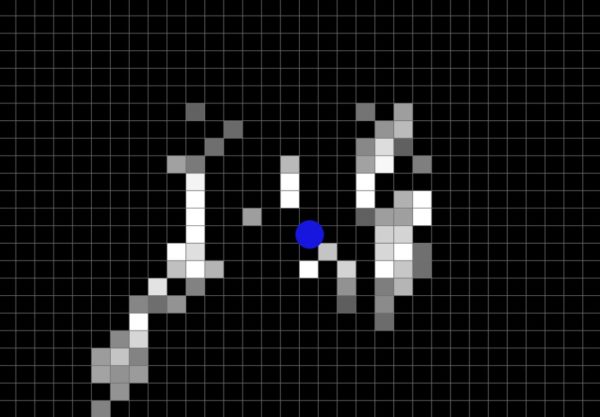
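Stripped of the p5 calls, each cell in the sketch above comes down to two steps: find the RGBA offset of the horizontally mirrored pixel, then average its R, G and B values. The standalone sketch below assumes a flat RGBA array laid out like p5's `pixels` (4 entries per pixel, row by row, pixel density 1); `brightnessGrid` is a hypothetical helper name, not part of p5.

```javascript
// Hypothetical helper: turns a flat RGBA array (laid out like p5's video.pixels)
// into a 2D grid of brightness values, mirrored horizontally like a selfie view.
function brightnessGrid(pixels, w, h) {
  const grid = [];
  for (let y = 0; y < h; y++) {
    const row = [];
    for (let x = 0; x < w; x++) {
      // read from the opposite column so the output is mirrored
      const index = (w - x - 1 + y * w) * 4;
      const r = pixels[index];
      const g = pixels[index + 1];
      const b = pixels[index + 2];
      row.push((r + g + b) / 3); // plain average, same as the sketch
    }
    grid.push(row);
  }
  return grid;
}

// 2 x 1 image: left pixel pure red, right pixel white
const px = [255, 0, 0, 255, 255, 255, 255, 255];
console.log(brightnessGrid(px, 2, 1)); // [ [ 255, 85 ] ]
```

Reading column `w - x - 1` instead of `x` is what makes the grid behave like a mirror: the white pixel on the right of the camera image ends up in the left cell.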

smooth blob detection

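pixel difference operation, aka low-tech motion detection: each frame's brightness is compared cell by cell with the previous frame, the cells that got darker by more than a threshold are averaged into a motion center, and a blob eases towards that center.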
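The core of the difference operation can be tried outside the browser. This is a minimal sketch, assuming both frames are already flat brightness arrays of `w * h` cells (the role `pmtx` and `bri` play in the sketch); `motionCentroid` is a hypothetical name. One deliberate deviation: it takes the absolute difference, so cells that got brighter count as well as cells that got darker, whereas the sketch's `pmtx - bri` test only fires when a cell gets darker.

```javascript
// Hypothetical helper: compares two brightness frames (flat arrays of w * h cells)
// and returns how many cells changed plus their average grid position.
function motionCentroid(prev, curr, w, h, threshold) {
  let count = 0;
  let sumX = 0;
  let sumY = 0;
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      const i = x + y * w;
      // absolute difference: darkening and brightening both count as motion
      const diff = Math.abs(prev[i] - curr[i]);
      if (diff > threshold) {
        sumX += x;
        sumY += y;
        count++;
      }
    }
  }
  // nothing moved: return null so the caller can keep its previous target
  if (count === 0) return null;
  return { count, x: sumX / count, y: sumY / count };
}

const prev = [100, 100, 100, 100, 100, 100];
const curr = [100, 100, 200, 100, 100, 20];
console.log(motionCentroid(prev, curr, 3, 2, 30)); // { count: 2, x: 2, y: 0.5 }
```

The returned position is in grid cells; multiplying by `xscl` and `yscl` gives canvas coordinates. Returning `null` when nothing moved plays the same role as the `thres_ticker > 0` check in the sketch.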

```javascript
let gsx = 32;
let gsy = 24;
let xscl = 1;
let yscl = 1;
let video;
let pmtx = []; // brightness of every cell in the previous frame

// motion detection vars
let motion_threshold = 30; // minimum brightness change (0..255) that counts as motion
let motion_center_x = 0;
let motion_center_y = 0;
let target_x = 0; // last measured motion center
let target_y = 0;
let smooth_x = 0; // eased blob position that is actually drawn
let smooth_y = 0;

function setup() {
  createCanvas(window.innerWidth, window.innerHeight);
  xscl = width / gsx;
  yscl = height / gsy;
  video = createCapture(VIDEO);
  video.size(gsx, gsy);
  video.hide();
  noSmooth();
}

function draw() {
  let thres_ticker = 0; // number of cells above the threshold this frame
  motion_center_x = 0;  // reset center
  motion_center_y = 0;
  background(111);
  video.loadPixels();
  if (video.pixels.length === 0) return; // no camera frame yet
  noStroke();
  for (let x = 0; x < gsx; x++) {
    for (let y = 0; y < gsy; y++) {
      let i = video.width - x - 1 + y * video.width; // mirrored cell index
      let index = i * 4;                             // RGBA offset
      let r = video.pixels[index + 0];
      let g = video.pixels[index + 1];
      let b = video.pixels[index + 2];
      let bri = (r + g + b) / 3;
      let xpos = x * xscl;
      let ypos = y * yscl;
      // > 0 when the cell got darker (use abs() to also catch cells that got brighter);
      // NaN on the very first frame, which never passes the test below
      let diff = pmtx[i] - bri;
      if (diff > motion_threshold) {
        fill(diff * 3);
        motion_center_x += xpos + xscl / 2; // sum cell centers
        motion_center_y += ypos + yscl / 2;
        thres_ticker++;
      } else {
        fill(0);
      }
      rect(xpos, ypos, xscl - 1, yscl - 1); // 1px gap between cells
      pmtx[i] = bri;
    }
  }
  if (thres_ticker > 0) {
    // average position of all moving cells = motion center
    target_x = motion_center_x / thres_ticker;
    target_y = motion_center_y / thres_ticker;
  }
  // ease towards the target: 2 % of the remaining distance per frame
  smooth_x = lerp(smooth_x, target_x, 0.02);
  smooth_y = lerp(smooth_y, target_y, 0.02);
  // draw motion center blob
  fill(22, 22, 222);
  ellipse(smooth_x, smooth_y, 42, 42);
}
```
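`lerp(smooth_x, target_x, 0.02)` moves the blob 2 % of the remaining distance per frame, which is exponential smoothing: after n frames a fraction 0.98^n of the original gap is still left. The sketch below counts the frames numerically; `framesToReach` is a hypothetical helper, and `lerp` is re-implemented with the same formula as p5's so it runs in plain JavaScript.

```javascript
// Same formula as p5's lerp(start, stop, amt).
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Hypothetical helper: number of frames until a value eased with lerp(..., amt)
// has covered `fraction` of the distance from 0 to a fixed target of 1.
function framesToReach(fraction, amt) {
  const target = 1;
  let value = 0;
  let frames = 0;
  while (value < fraction * target) {
    value = lerp(value, target, amt);
    frames++;
  }
  return frames;
}

console.log(framesToReach(0.95, 0.02)); // 149
console.log(framesToReach(0.95, 0.1));  // 29
```

At p5's default target of 60 frames per second, 149 frames is roughly 2.5 s, which is why the blob feels sluggish; an amount of 0.1 covers the same 95 % in 29 frames, about half a second.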

motion detection / heatmap buffer method

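basic heatmap motion detection: every above-threshold pixel difference is added to a buffer array that decays a little each frame, and the buffer is drawn as a greyscale image stretched over the canvas.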
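Per cell, the buffer update is: if the brightness dropped by more than the threshold, add twice the truncated difference, then multiply by 0.97 regardless. A minimal sketch of that rule on plain arrays, assuming flat `w * h` brightness frames; `updateHeat` and its `gain`/`decay` parameters are hypothetical names for the constants hard-coded in the sketch.

```javascript
// Hypothetical helper: one frame of the heatmap update, applied in place.
// prev/curr are flat brightness arrays; heat holds one value per cell.
function updateHeat(heat, prev, curr, threshold, gain = 2, decay = 0.97) {
  for (let i = 0; i < heat.length; i++) {
    // positive when the cell got darker, as in the sketch
    const diff = prev[i] - curr[i];
    if (diff > threshold) {
      heat[i] += Math.trunc(diff) * gain; // p5's int() also truncates numbers
    }
    heat[i] *= decay; // every cell cools down a little each frame
  }
  return heat;
}

const heat = [0, 0, 10];
updateHeat(heat, [100, 100, 100], [60, 160, 100], 30);
console.log(heat); // roughly [77.6, 0, 9.7]
```

Two consequences of the constants: heat halves in about ln 0.5 / ln 0.97 ≈ 23 frames (under 0.4 s at 60 fps), and a cell that keeps changing by d every frame settles where h = 0.97 · (h + 2d), i.e. h ≈ 64.7 · d, so any sustained motion above the threshold of 30 saturates to white.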

```javascript
let gsx = 128;
let gsy = 96;
let xscl = 1;
let yscl = 1;
let video;
let pmtx = []; // brightness of every cell in the previous frame

// motion detection vars
let motion_threshold = 30;
let motion_center_x = 0;
let motion_center_y = 0;
let target_x = 0;
let target_y = 0;
let smooth_x = 0;
let smooth_y = 0;

let heatmap;           // offscreen image the heat values are written into
let heatmap_data = []; // one heat value per cell

function setup() {
  createCanvas(window.innerWidth, window.innerHeight);
  heatmap = createGraphics(gsx, gsy); // 128 x 96, stretched when drawn
  heatmap.pixelDensity(1);            // one RGBA group per cell, even on high-DPI screens
  heatmap_data = new Array(gsx * gsy);
  pmtx = new Array(gsx * gsy);        // must exist before init_heatmap_data() fills it
  init_heatmap_data();
  xscl = width / gsx;
  yscl = height / gsy;
  video = createCapture(VIDEO);
  video.size(gsx, gsy);
  video.hide();
}

function draw() {
  let thres_ticker = 0; // number of cells above the threshold this frame
  motion_center_x = 0;  // reset center
  motion_center_y = 0;
  render_heatmap();
  video.loadPixels();
  if (video.pixels.length === 0) return; // no camera frame yet
  for (let x = 0; x < gsx; x++) {
    for (let y = 0; y < gsy; y++) {
      let i = video.width - x - 1 + y * video.width; // mirrored cell index
      let index = i * 4;                             // RGBA offset
      let r = video.pixels[index + 0];
      let g = video.pixels[index + 1];
      let b = video.pixels[index + 2];
      let bri = (r + g + b) / 3;
      let diff = pmtx[i] - bri; // > 0 when the cell got darker
      if (diff > motion_threshold) {
        heatmap_data[i] += int(diff) * 2; // add heat where something changed
        motion_center_x += x * xscl;
        motion_center_y += y * yscl;
        thres_ticker++;
      }
      heatmap_data[i] *= 0.97; // every cell cools down ~3 % per frame
      pmtx[i] = bri;
    }
  }
  if (thres_ticker > 0) {
    target_x = motion_center_x / thres_ticker;
    target_y = motion_center_y / thres_ticker;
  }
  // smoothed motion center: computed but not drawn in this version
  smooth_x = lerp(smooth_x, target_x, 0.02);
  smooth_y = lerp(smooth_y, target_y, 0.02);
}

function render_heatmap() {
  heatmap.loadPixels();
  for (let i = 0; i < heatmap_data.length; i++) {
    let v = heatmap_data[i]; // values above 255 are clamped by the pixel array
    heatmap.pixels[i * 4] = v;
    heatmap.pixels[i * 4 + 1] = v;
    heatmap.pixels[i * 4 + 2] = v;
    heatmap.pixels[i * 4 + 3] = 155; // semi-transparent: earlier frames show through as trails
  }
  heatmap.updatePixels();
  image(heatmap, 0, 0, width, height); // stretch the small image over the canvas
}

function init_heatmap_data() {
  for (let i = 0; i < heatmap_data.length; i++) heatmap_data[i] = 1;
  for (let i = 0; i < pmtx.length; i++) pmtx[i] = 1;
}
```
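`render_heatmap()` assumes the graphics buffer holds exactly one RGBA group per cell. On high-DPI screens a `p5.Graphics` uses the display's pixel density by default, so its `pixels` array holds density² times as many entries; calling `heatmap.pixelDensity(1)` in `setup()` keeps the 1:1 layout. The packing itself can be checked in isolation. This sketch assumes density 1 and uses a `Uint8ClampedArray`, the same typed array p5 uses for `pixels`, so heat values above 255 clamp instead of wrapping; `packHeatmap` is a hypothetical name.

```javascript
// Hypothetical helper: packs heat values into an RGBA buffer shaped like
// p5's pixels array at pixelDensity(1): grey = heat, fixed alpha.
function packHeatmap(heat, alpha = 155) {
  // Uint8ClampedArray clamps to 0..255 and rounds, so no manual clamping needed
  const out = new Uint8ClampedArray(heat.length * 4);
  for (let i = 0; i < heat.length; i++) {
    out[i * 4] = heat[i];
    out[i * 4 + 1] = heat[i];
    out[i * 4 + 2] = heat[i];
    out[i * 4 + 3] = alpha;
  }
  return out;
}

// the second cell's heat of 300 ends up as 255 in its R, G and B channels
console.log(Array.from(packHeatmap([0, 300])));
```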

todo: implement a 3D voxel version with a shader, see https://www.shadertoy.com/view/NtfXWS

color blob detection

the sketch needs tracking.js loaded before sketch.js, e.g. in index.html:

```html
<!DOCTYPE html>
<html>
<head>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/p5.js"></script>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/addons/p5.dom.min.js"></script>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/addons/p5.sound.min.js"></script>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/tracking.js/1.1.3/tracking-min.js" integrity="sha256-tTzggcG45kvlQd/lMp1iI1SR4R0vC4Cx9/J1GegZ32A=" crossorigin="anonymous"></script>
  <link rel="stylesheet" type="text/css" href="style.css">
  <meta charset="utf-8" />
</head>
<body>
  <script src="sketch.js"></script>
</body>
</html>
```

```javascript
var colors;       // tracking.js color tracker
var capture;      // p5 webcam capture
var trackingData; // latest detections from tracking.js

function setup() {
  createCanvas(windowWidth, windowHeight);
  capture = createCapture(VIDEO);  // capture the webcam
  capture.position(0, 0);          // move the capture to the top left so it lines up with the canvas
  capture.style('opacity', 0.5);   // change to 0 to hide the capture later on
  capture.id('myVideo');           // give the capture an ID so tracking.js can find it
  // colors = new tracking.ColorTracker(['magenta', 'cyan', 'yellow']);
  colors = new tracking.ColorTracker(['yellow']);
  // start tracking the colors above on the camera element
  tracking.track('#myVideo', colors);
  // runs each time tracking.js has processed a frame
  colors.on('track', function (event) {
    trackingData = event.data; // store the detections in a global so draw() can use them
  });
}

function draw() {
  clear(); // remove last frame's rectangles so they don't pile up
  if (trackingData) {
    // one rectangle per detected color region
    for (var i = 0; i < trackingData.length; i++) {
      rect(trackingData[i].x, trackingData[i].y, trackingData[i].width, trackingData[i].height);
    }
  }
}
```
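tracking.js reports every matching region as an object with `x`, `y`, `width` and `height` (plus the matched color name). To drive something with a single point, one option is to keep only the largest region and use its center. A minimal sketch, assuming that result shape; `largestBlob` is a hypothetical helper, not part of tracking.js.

```javascript
// Hypothetical helper: picks the biggest rectangle from a tracking.js-style
// result array ({x, y, width, height}) and adds its center point.
function largestBlob(rects) {
  if (!rects || rects.length === 0) return null;
  let best = rects[0];
  for (const r of rects) {
    if (r.width * r.height > best.width * best.height) best = r;
  }
  return {
    ...best,
    cx: best.x + best.width / 2,  // horizontal center
    cy: best.y + best.height / 2, // vertical center
  };
}

const found = [
  { x: 0, y: 0, width: 10, height: 10 },
  { x: 50, y: 20, width: 30, height: 40 },
];
console.log(largestBlob(found)); // the 30 x 40 region, with cx: 65 and cy: 40
```

Inside `draw()` this could replace the loop, e.g. `let blob = largestBlob(trackingData); if (blob) ellipse(blob.cx, blob.cy, 20, 20);`, which ignores small false positives from other yellow things in the room.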

ai based tracking

pose tracking with ml5.js PoseNet, webcam example: https://editor.p5js.org/ml5/sketches/PoseNet_webcam