Blender is a widely used 3D program today. In combination with generative AI (genAI), we can use it to guide generative renderings in a surprisingly precise manner. Fine 3D details or lighting are not needed for this approach, as we mainly work with depth information and rudimentary color information.

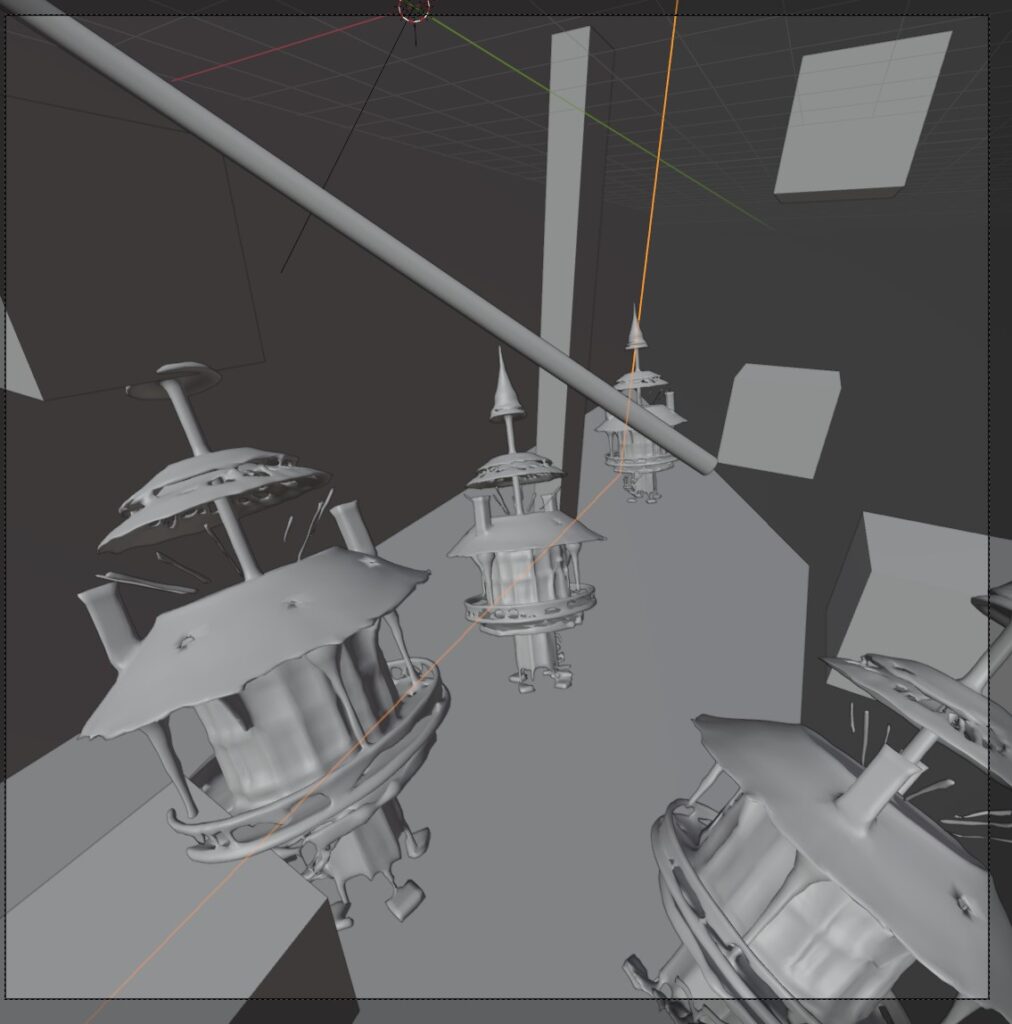

Basic manual pipeline

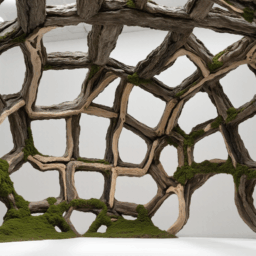

The basic approach is to create a 3D scene of your choice, using any camera angle, primitives, or models you like. Render your preferred shot of the scene and drop it into ControlNet with a depth model (DepthNet). With the help of the Depth Anything model, we can extract depth information from this image and use it to guide a custom generative rendering.

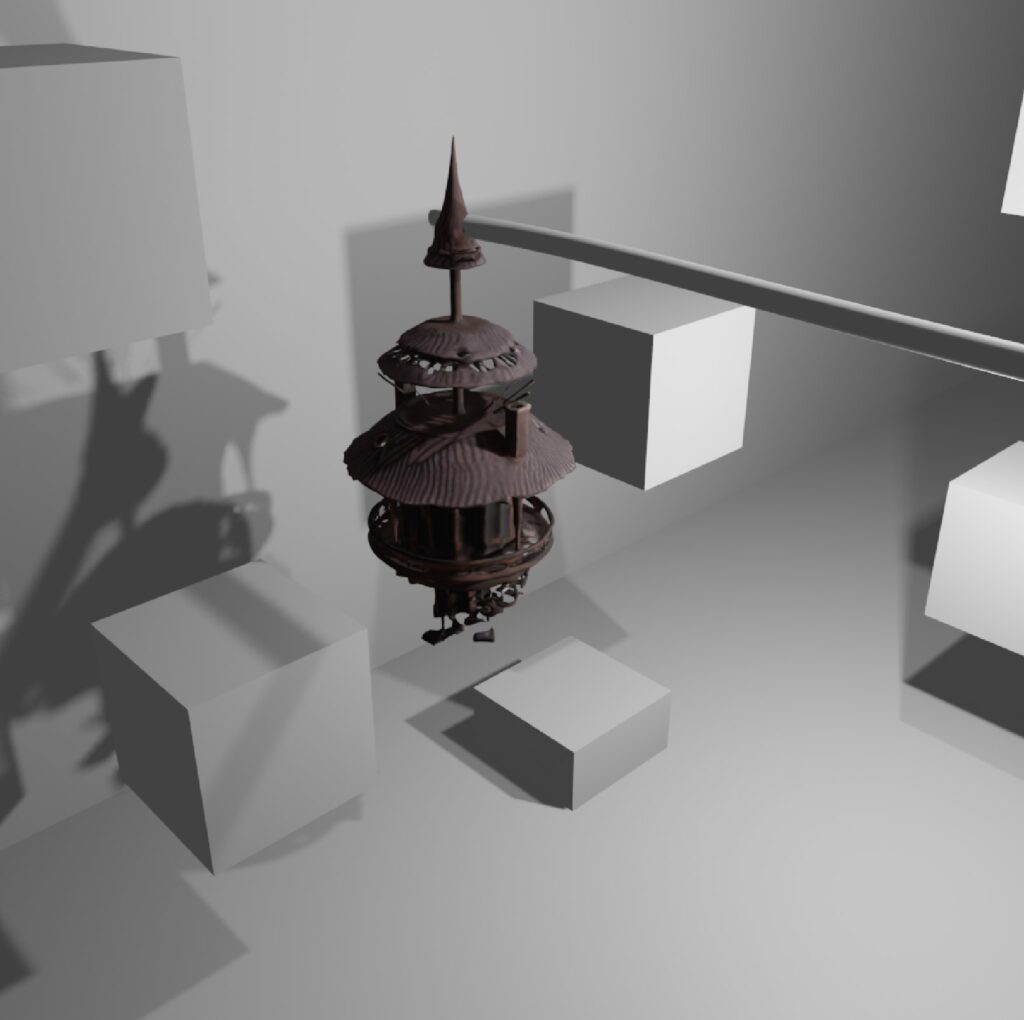

bright studio photo of black concrete blocks, white concrete wall

bright studio photo of black concrete blocks, white concrete wall, white floor, wooden detailed lantern on the wall , white background

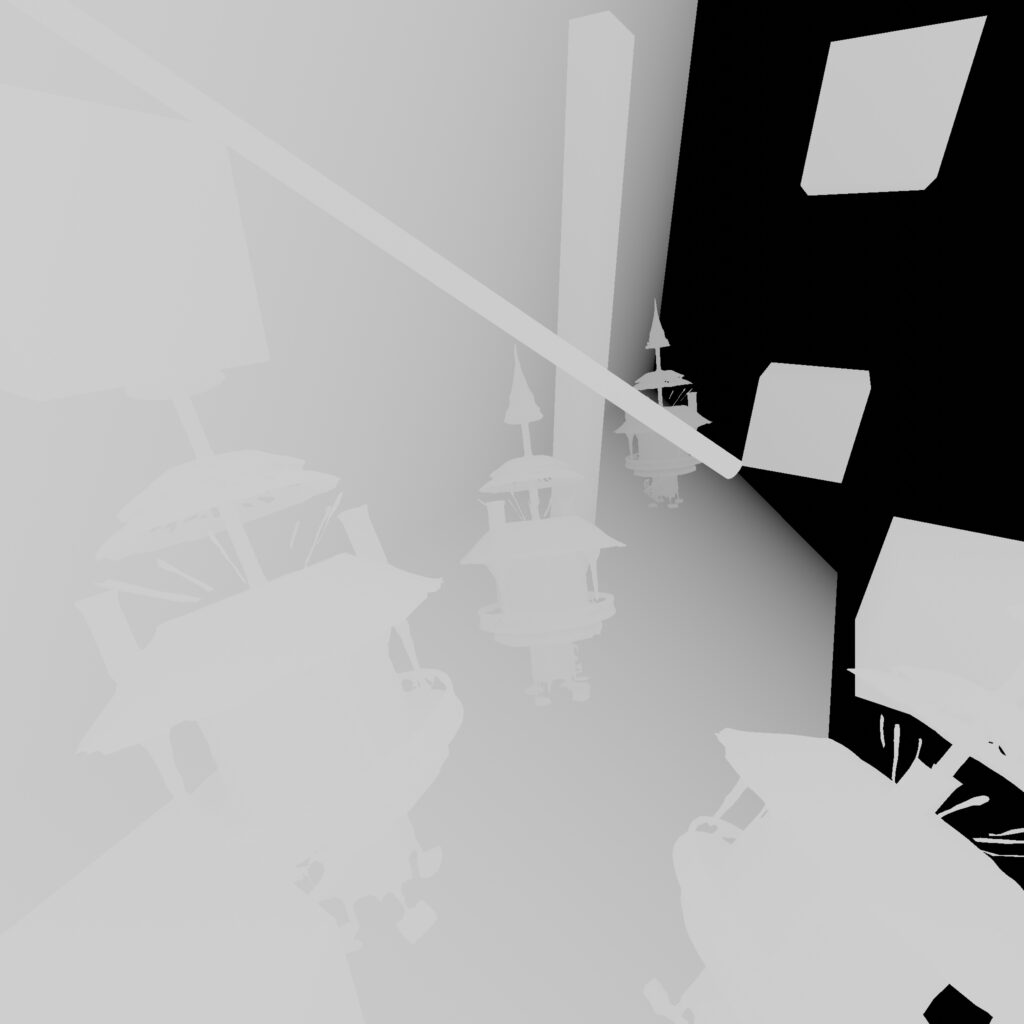

Create a custom depth map

In Blender, we can create precise depth maps natively, since we are already working with 3D geometry. 🙂 Instead of using AI to estimate depth (Depth Anything), we take this manual approach to gain more control over specific details when needed. Follow along with the fairly basic tutorial by ArtByAdRock.
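Blender can write the camera distance of every pixel into the Z (depth) render pass. A common compositor recipe, which may or may not match the tutorial step for step, is to normalize that pass and then invert it, because ControlNet depth models expect near surfaces to be bright and far surfaces dark. The sketch below does the same normalize-and-invert math in plain Python on a small grid of Z values, for example values exported from the pass. The function name and the `background_threshold` cutoff for empty background pixels (which get a very large or infinite distance) are illustrative assumptions.

```python
import math


def z_to_depth_map(z_rows, background_threshold=1e9):
    """Turn raw Z values (camera distance per pixel) into an 8-bit depth map
    in the ControlNet convention: nearest surface = 255 (white), farthest = 0.

    Pixels with a non-finite Z or Z >= background_threshold are treated as
    empty background (an assumption of this sketch) and set to 0.
    """
    def is_background(z):
        return not math.isfinite(z) or z >= background_threshold

    geometry = [z for row in z_rows for z in row if not is_background(z)]
    if not geometry:
        # Only background in view: return an all-black map of the same shape.
        return [[0] * len(row) for row in z_rows]

    z_near, z_far = min(geometry), max(geometry)
    span = (z_far - z_near) or 1.0  # avoid dividing by zero for a flat scene

    depth_map = []
    for row in z_rows:
        out_row = []
        for z in row:
            if is_background(z):
                out_row.append(0)
            else:
                t = (z - z_near) / span                 # normalize: 0 = near, 1 = far
                out_row.append(round(255 * (1.0 - t)))  # invert: near becomes bright
        depth_map.append(out_row)
    return depth_map
```

For example, `z_to_depth_map([[1.0, 2.0], [3.0, 1e10]])` returns `[[255, 128], [0, 0]]`: the nearest pixel becomes white, while the farthest surface and the background both end up black.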

Fine-tune the depth map

As a result, we can use our custom depth maps and fine-tune them to bring out the right details.
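Fine-tuning a depth map largely comes down to deciding which slice of the depth range receives the available 256 gray levels. In Blender this could be done with a Map Range or ColorRamp node in the compositor; the hypothetical `remap_depth` helper below sketches the same idea on an 8-bit depth map. Values between `in_low` and `in_high` are stretched to the full 0 to 255 range (everything outside is clamped), and an optional gamma curve shifts contrast toward near or far surfaces. The parameter names and the gamma step are assumptions for illustration, not part of the original workflow.

```python
def remap_depth(depth_rows, in_low, in_high, gamma=1.0):
    """Stretch the 8-bit depth window [in_low, in_high] to the full 0-255 range.

    Values outside the window are clamped (like Blender's Map Range node with
    Clamp enabled). With near = bright, gamma > 1 spreads out the near (bright)
    values and compresses the far ones; gamma < 1 does the opposite.
    """
    if not 0 <= in_low < in_high <= 255:
        raise ValueError("expected 0 <= in_low < in_high <= 255")
    if gamma <= 0:
        raise ValueError("gamma must be positive")

    remapped = []
    for row in depth_rows:
        new_row = []
        for v in row:
            t = (v - in_low) / (in_high - in_low)
            t = min(1.0, max(0.0, t))          # clamp to the chosen window
            new_row.append(round(255 * t ** gamma))
        remapped.append(new_row)
    return remapped
```

For example, `remap_depth([[0, 64, 128, 192, 255]], 64, 192)` returns `[[0, 0, 128, 255, 255]]`, so all gray levels are spent on the middle band of the scene, where the details of interest are assumed to sit.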

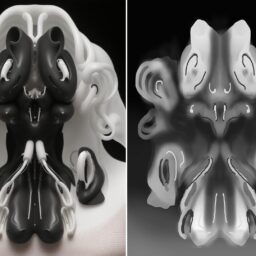

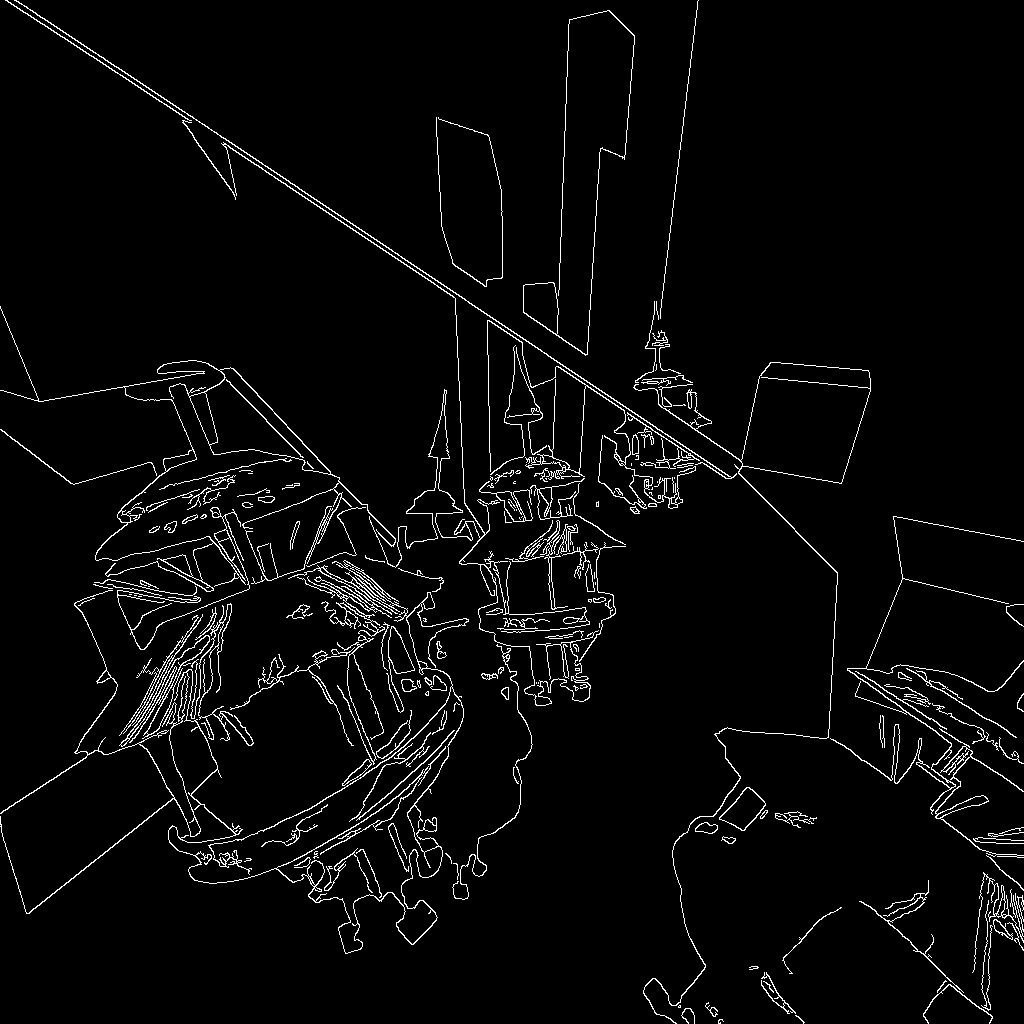

Fine details through CannyNet

Additionally, we can add a second ControlNet unit with the Canny model to emphasize edges and contours if they seem lost. As input for this unit, we use the standard rendered image.
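The Canny preprocessor in common ControlNet setups typically runs OpenCV's `cv2.Canny` on the input image, and its low and high thresholds decide which contours survive. To show what those two thresholds do, here is a pure-Python sketch of the standard Canny steps (Gaussian blur, Sobel gradients, non-maximum suppression, double threshold with hysteresis) on a small grayscale grid. It is an illustration of the algorithm, not the code the preprocessor actually runs, and the threshold values in the example are arbitrary.

```python
import math
from collections import deque


def _px(img, y, x):
    # Replicate border pixels so 3x3 kernels can be applied at the image edges.
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def _filter3x3(img, k):
    # Apply a 3x3 kernel as a cross-correlation (no kernel flip).
    h, w = len(img), len(img[0])
    return [[sum(k[j][i] * _px(img, y + j - 1, x + i - 1)
                 for j in range(3) for i in range(3))
             for x in range(w)] for y in range(h)]


def canny(gray, low, high):
    """Minimal Canny edge detector on a grayscale image (list of rows, 0-255).
    Returns a binary edge map: 255 = edge, 0 = no edge."""
    if low > high:
        raise ValueError("low threshold must not exceed high threshold")
    h, w = len(gray), len(gray[0])
    # 1. Smooth with a 3x3 Gaussian kernel to suppress noise.
    blurred = [[v / 16 for v in row]
               for row in _filter3x3(gray, [[1, 2, 1], [2, 4, 2], [1, 2, 1]])]
    # 2. Sobel gradients (x to the right, y downwards) and their magnitude.
    gx = _filter3x3(blurred, [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]])
    gy = _filter3x3(blurred, [[-1, -2, -1], [0, 0, 0], [1, 2, 1]])
    mag = [[math.hypot(gx[y][x], gy[y][x]) for x in range(w)] for y in range(h)]
    # 3. Non-maximum suppression: keep a pixel only if it is a local maximum
    #    along its gradient direction (quantized to 0/45/90/135 degrees).
    thin = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            m = mag[y][x]
            if m == 0:
                continue
            angle = math.degrees(math.atan2(gy[y][x], gx[y][x])) % 180
            if angle < 22.5 or angle >= 157.5:
                dy, dx = 0, 1
            elif angle < 67.5:
                dy, dx = 1, 1
            elif angle < 112.5:
                dy, dx = 1, 0
            else:
                dy, dx = 1, -1
            # Strict ">" on one side keeps plateaus one pixel wide.
            if m > _px(mag, y - dy, x - dx) and m >= _px(mag, y + dy, x + dx):
                thin[y][x] = m
    # 4. Double threshold + hysteresis: strong pixels (>= high) are edges;
    #    weak pixels (>= low) become edges only if connected to a strong one.
    edges = [[0] * w for _ in range(h)]
    queue = deque((y, x) for y in range(h) for x in range(w) if thin[y][x] >= high)
    for y, x in queue:
        edges[y][x] = 255
    while queue:
        y, x = queue.popleft()
        for ny in range(y - 1, y + 2):
            for nx in range(x - 1, x + 2):
                if (0 <= ny < h and 0 <= nx < w and not edges[ny][nx]
                        and thin[ny][nx] >= low):
                    edges[ny][nx] = 255
                    queue.append((ny, nx))
    return edges
```

On a 6×6 image whose left half is black and right half white, `canny(img, 100, 200)` marks a single one-pixel-wide vertical edge. Raising `high` drops faint contours entirely, while lowering `low` lets weak edges that touch strong ones extend the traced outline.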

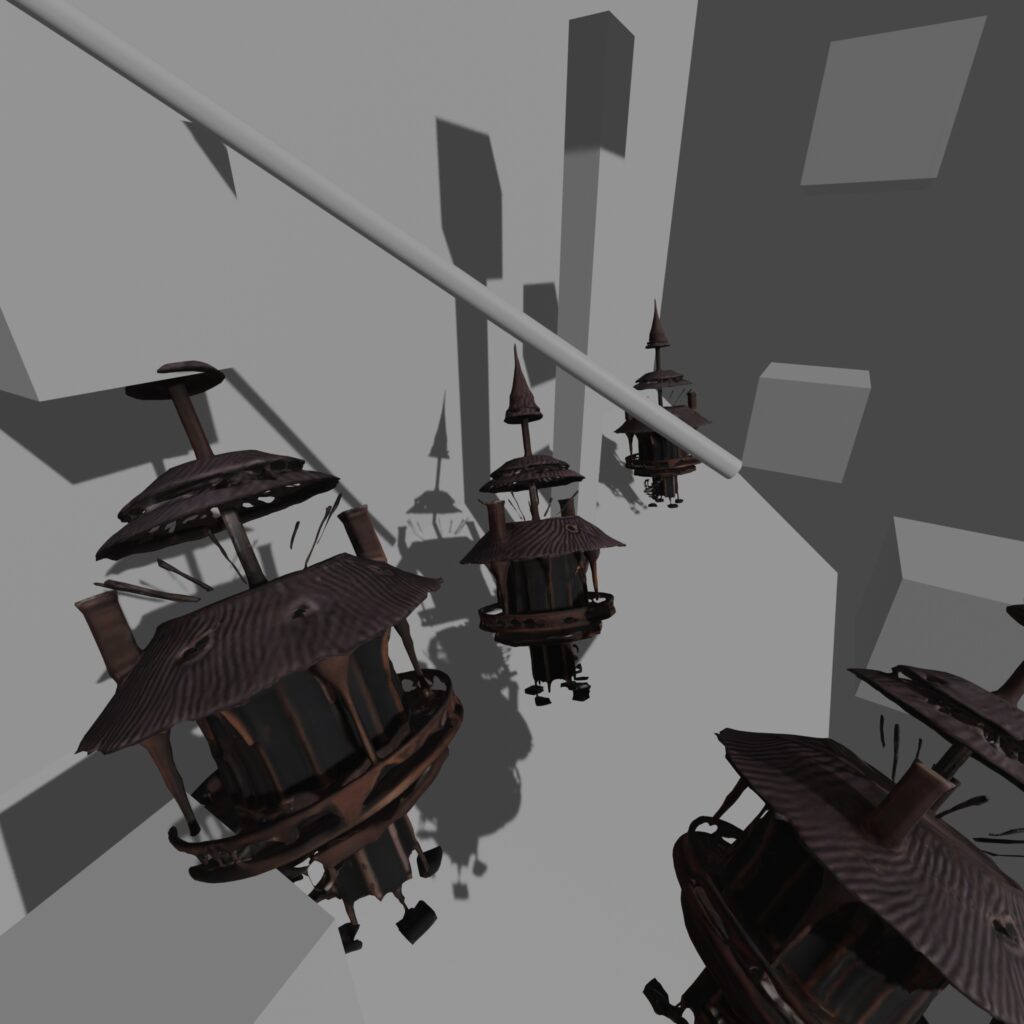

Variation in rendering results

realistic photo of( multiple wooden detailed ancient dirty used lanterns like little houses made from old wood with candles candlelight inside:1.1), grey dirty used wet concrete walls and blocks, ( flooded floor with black dark water:1.1), dark night, depth of field

realistic photo of( colorful candy toys miniature figures and buildings, in wild childrens room playground, white fluffy clouds : 1.2), depth of field, pastel color palette, high contrast, hard shadows, glossy

Video interpolation

Starting from a good keyframe generated with this workflow, we can use common video interpolation engines to animate narratives in these scenes. For now, this is quite experimental, as we lack proper controls to direct the video generation.